CICD

DevOps

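DevOps combines Development and Operations. It is a methodology: a collective term for a set of processes, methods, and systems that promote communication, collaboration, and integration among application development (Dev), application operations (Ops), and quality assurance (QA), with the aim of breaking down the traditional barriers between development and operations.

By automating software delivery and infrastructure changes, DevOps makes building, testing, and releasing software faster, more frequent, and more reliable. In practice, it improves communication and collaboration throughout delivery and deployment so that higher-quality products ship faster and more reliably.

DevOps does not refer to any specific tool or combination of tools; many tools and tool chains can implement its ideas. Like OOP, AOP, and IoC (or DI) in software development, it is an abstraction or shorthand for a theory, process, or method. Today, container technology and K8S are at the core of DevOps.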

CI/CD

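CI/CD is a way to deliver applications to customers frequently by introducing automation into the stages of application development. Its main concepts are continuous integration, continuous delivery, and continuous deployment.

It is a solution to the problems that integrating new code can cause for development and operations teams (also known as "integration hell").

CI stands for continuous integration, an automation process for developers. Successful CI means that new code changes are regularly built, tested, and merged into a shared repository. It addresses the problem of too many concurrently developed branches that may conflict with one another.

CD stands for continuous delivery (Continuous Delivery) and/or continuous deployment (Continuous Deployment). Both cover later stages of the automated pipeline, but the terms are sometimes used separately to indicate how far the automation goes. Continuous delivery means that developers' changes are automatically tested for bugs and uploaded to a repository (such as GitHub or a container registry), from which the operations team can deploy them to the live production environment. It addresses poor visibility and communication between development and business teams, and aims to make deploying new code take minimal effort.

Continuous deployment means automatically releasing developers' changes from the repository to production, where customers can use them. It reduces manual deployment work and builds on continuous delivery by automating the next stage of the pipeline.

Continuous integration (CI) helps developers merge their code changes back into a shared branch, or "trunk", more frequently. Once the changes are merged, they are validated by automatically building the application and running different levels of automated tests (typically unit and integration tests) to make sure the changes do not break the application. This means testing everything from classes and functions to the modules that make up the whole application. If automated tests find a conflict between new and existing code, CI makes it easier to fix those bugs quickly and often.

Continuous delivery (CD): validated code is automatically published to a repository. The goal is a code base that is always ready to be deployed to production. In continuous delivery, every stage from merging code changes to delivering a production-ready build involves test automation and release automation. At the end of the process, the operations team can deploy the application to production quickly and easily.

Continuous deployment (CD): after a production-ready build has been published to the repository, the application is automatically released to production. Because there is no manual gate before production, it relies on well-designed test automation.

Continuous deployment means that a developer's change to a cloud application can go live within minutes of being written (assuming it passes the automated tests). This makes it easier to receive and incorporate user feedback continuously. It also lowers deployment risk, because changes can be released in small pieces rather than all at once.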

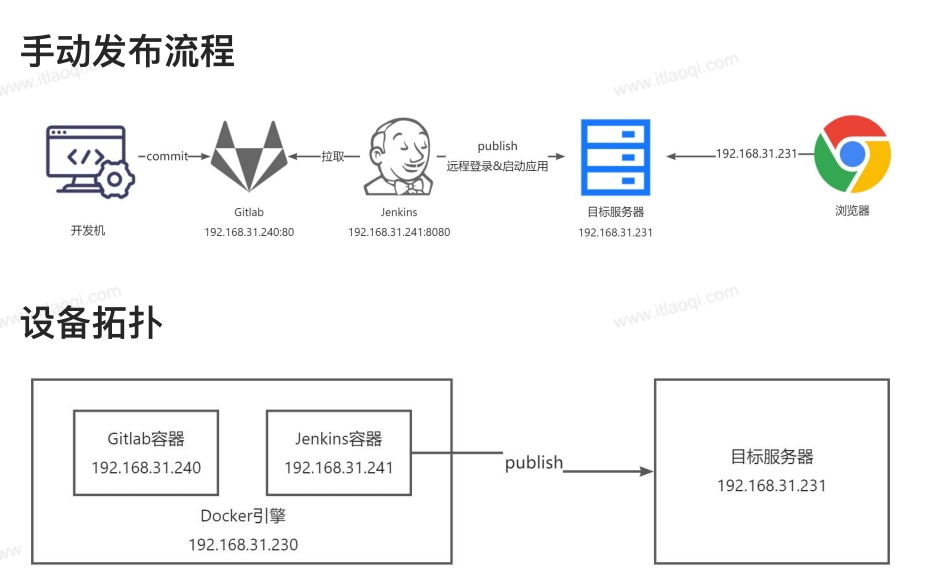

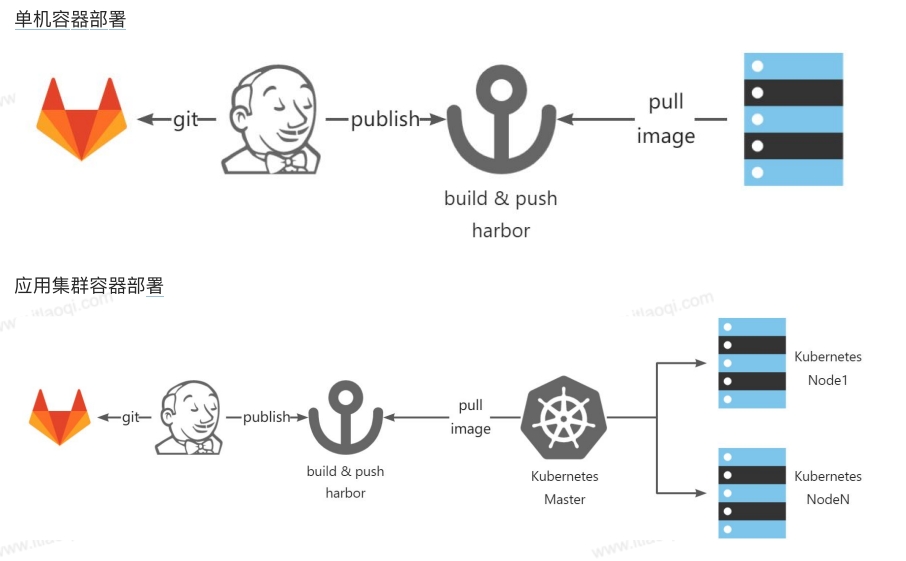

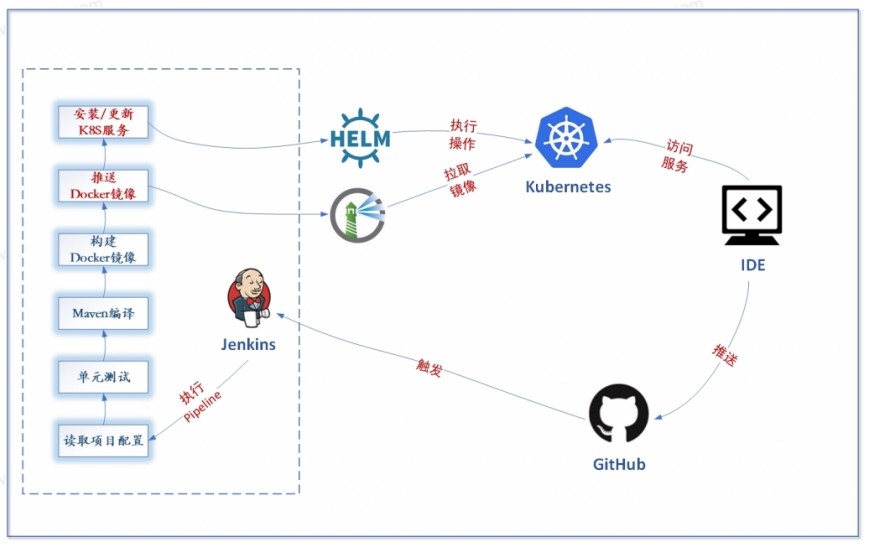

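Push code to GitLab --> trigger the Jenkins build --> pull the project, build the jar with Maven, run docker build with the project's Dockerfile --> push the resulting Docker image to Harbor --> render the image address and other common data into the K8S resource manifests --> submit them to the API server --> the Jenkins console prints the access URL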

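Helm and Helm Charts

To deploy an application to the cloud, you first prepare the environment it needs and package it as a Docker image, then reference the image in a Deployment and configure the Service, the ServiceAccount and Role the application needs, the Namespace, Secrets, PersistentVolumes, and other resources. In other words, you write a series of interrelated YAML files and deploy them to the Kubernetes cluster.

Helm is an application package manager built on Kubernetes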

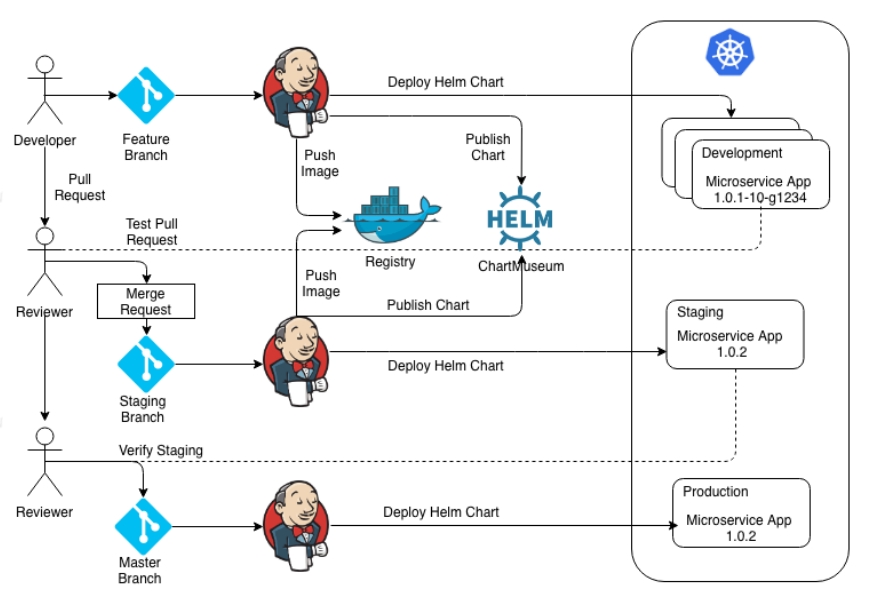

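Stage 1: A developer changes the code, tests it locally, and pushes the change to git, which triggers the Jenkins pipeline. The pipeline builds a new Docker image, tags it with the output of git describe, and pushes it to the Docker registry. The same tag is updated in the Helm chart code, and the chart is pushed to the chart repository. Jenkins then deploys to the development environment by running helm upgrade against the new chart version in the development namespace. Finally, the developer opens a pull request.

Stage 2: A reviewer reviews the code and, once satisfied, accepts it and merges it into the release branch. The version number can be updated by dropping all suffixes and incrementing the major/minor/patch version; a new Docker image is created with the new tag, and the Helm chart is updated with the same tag. Jenkins then runs helm upgrade in the staging namespace so that others can verify the release.

Stage 3: If staging looks good, the last step is to promote the change to production. In this case the Jenkins pipeline does not build a new Docker image; it takes the latest Helm chart version and upgrades the chart in the production namespace.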
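The version handling in stages 1 and 2 can be sketched in shell. This is only an illustration: it assumes tags are plain MAJOR.MINOR.PATCH and that git describe appends a -<count>-g<hash> suffix to them; the function names strip_describe_suffix and bump_patch are hypothetical and not part of the original workflow.

```shell
#!/bin/sh
# Hypothetical helpers; assumes tags look like MAJOR.MINOR.PATCH.

# Drop the "-<commits>-g<hash>" suffix that `git describe` adds after a tag.
strip_describe_suffix() {
  printf '%s\n' "${1%%-*}"
}

# Increase the patch component of a MAJOR.MINOR.PATCH version by one.
bump_patch() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the version on dots into $1 $2 $3
  IFS=$old_ifs
  printf '%s.%s.%s\n' "$1" "$2" "$(( $3 + 1 ))"
}

base=$(strip_describe_suffix "1.0.0-3-g1a2b3c4")
echo "release version: $base"
echo "next version: $(bump_patch "$base")"
```

Tags that themselves contain a hyphen (for example a release-candidate suffix) would need a different rule than the one assumed here.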

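Installation and Deployment

192.168.1.155: Harbor server (do not deploy it on the macvlan network; port mapping does not work well there). Docker, Docker Compose, Harbor

192.168.1.176: production server. Docker, Docker Compose, JDK

192.168.1.184: Jenkins (192.168.1.241:8080) and GitLab (192.168.1.240) server (the containers use the macvlan network; note that port mapping cannot be used). Docker, Docker Compose, JDK, mvn.

192.168.1.177: kubeode server

Install Docker, Docker Compose, and JDK on 184

- Recommended configuration: 2 CPU cores, 8 GB RAM, CentOS 7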

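# Enable IP forwarding to support routing and communication between container clusters.

# Raise the limit on virtual memory map areas for memory-hungry applications (such as Elasticsearch).

# Stop the firewall temporarily so that firewall rules do not interfere with network debugging.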

cat >> /etc/sysctl.conf <<-'EOF'

net.ipv4.ip_forward=1

vm.max_map_count=655360

EOF

sysctl -p

systemctl stop firewalld

# Install prerequisite tools

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

# Add the Aliyun yum repository to speed up the Docker download

sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Refresh the repository metadata

sudo yum makecache fast

# Install Docker

sudo yum -y install docker-ce

# Start the Docker service

sudo service docker start

# Verify that Docker started successfully

docker version

# Use the Aliyun registry mirror to speed up image pulls, or configure a proxy

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://fskvstob.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

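# Install OpenJDK 8

yum -y install java-1.8.0-openjdk-devel.x86_64

# sudo does not apply to a shell redirection, so append with tee instead of "sudo cat >>"

sudo tee -a /etc/profile > /dev/null <<-'EOF'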

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

EOF

source /etc/profile

echo $JAVA_HOME

# Create the Docker network to match the ifconfig output. Macvlan gives every container its own IP and ports on the host's LAN

docker network create -d macvlan \

--subnet=192.168.1.0/24 \

--ip-range=192.168.1.0/24 \

--gateway=192.168.1.1 \

-o parent=ens33 \

-o macvlan_mode=bridge \

macvlan31

# Check the result

ip addr show macvlan0

41: macvlan0@ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 36:a4:b7:e0:8b:56 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.100/24 scope global macvlan0

valid_lft forever preferred_lft forever

inet6 2409:8a55:713:a450:34a4:b7ff:fee0:8b56/64 scope global mngtmpaddr dynamic

valid_lft 86370sec preferred_lft 86370sec

inet6 fe80::34a4:b7ff:fee0:8b56/64 scope link

valid_lft forever preferred_lft forever

# To remove the address: ip addr del 192.168.1.100/24 dev macvlan0
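The notes show a host-side macvlan0 interface but not how it was created. With the macvlan driver, the host normally cannot reach its own containers through the parent interface, so a common workaround is a host-side macvlan interface plus a route per container. The sketch below only prints the commands (run them as root if they fit your setup); the shim address 192.168.1.100 and the function name macvlan_shim_cmds are assumptions, while ens33 and 192.168.1.240 come from the notes above.

```shell
#!/bin/sh
# Print (do not execute) the commands for a host-side macvlan interface.
# $1: parent NIC, $2: free LAN IP for the host side, $3: container IP to reach.
macvlan_shim_cmds() {
  parent=$1
  shim_ip=$2
  container_ip=$3
  echo "ip link add macvlan0 link $parent type macvlan mode bridge"
  echo "ip addr add $shim_ip/32 dev macvlan0"
  echo "ip link set macvlan0 up"
  echo "ip route add $container_ip/32 dev macvlan0"
}

# Example: let the 184 host reach the GitLab container at 192.168.1.240.
macvlan_shim_cmds ens33 192.168.1.100 192.168.1.240
```

The interface created this way does not survive a reboot; persisting it depends on the distribution's network configuration and is left out here.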

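Install GitLab (a self-hosted equivalent of GitHub) on 184 and assign it IP 240; make sure it does not conflict with the IP of any host or VM

# Create the directories and set permissions (any previous installation is removed first)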

rm -rf /etc/gitlab

rm -rf /var/log/gitlab

rm -rf /var/opt/gitlab

docker rm -f gitlab

mkdir -p /etc/gitlab

mkdir -p /var/log/gitlab

mkdir -p /var/opt/gitlab

chmod -R 755 /etc/gitlab

chmod -R 755 /var/log/gitlab

chmod -R 755 /var/opt/gitlab

# Start the GitLab container

docker run --name gitlab \

--hostname gitlab.example.com \

--restart=always \

--network macvlan31 --ip=192.168.1.240 \

-v /etc/gitlab:/etc/gitlab \

-v /var/log/gitlab:/var/log/gitlab \

-v /var/opt/gitlab:/var/opt/gitlab \

-d gitlab/gitlab-ce

docker logs -f gitlab

# Get the initial root password

docker exec -it gitlab grep 'Password:' /etc/gitlab/initial_root_password

Password: HxWxngkN31ppwQ3kPKpJMpdqP/Z/c0eAepIZm+uGWDs=

# Open http://192.168.1.240

# Username: root

# Password: HxWxngkN31ppwQ3kPKpJMpdqP/Z/c0eAepIZm+uGWDs=

# User avatar -> Preferences -> Password: change the initial password

# username: root

# password: 12345678

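Install Jenkins on 184 and assign it IP 241

Jenkins is written in Java and is used to monitor continuous, repetitive work, including continuously building, testing, and releasing software, and monitoring jobs executed by external calls.

cd /usr/local

# Install the JDK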

wget --no-check-certificate https://manongbiji.oss-cn-beijing.aliyuncs.com/ittailkshow/devops/download/jdk-8u341-linux-x64.tar.gz

tar zxvf jdk-8u341-linux-x64.tar.gz

mv jdk1.8.0_341 jdk

rm -f jdk-8u341-linux-x64.tar.gz

cd /usr/local

# Install Maven

wget --no-check-certificate https://manongbiji.oss-cn-beijing.aliyuncs.com/ittailkshow/devops/download/apache-maven-3.8.6-bin.tar.gz

tar zxvf apache-maven-3.8.6-bin.tar.gz

mv apache-maven-3.8.6 maven

rm -f apache-maven-3.8.6-bin.tar.gz

cd /usr/local/maven/conf

rm -f settings.xml

wget --no-check-certificate https://manongbiji.oss-cn-beijing.aliyuncs.com/ittailkshow/devops/download/settings.xml

# The downloaded settings.xml contains the following change

<mirrors>

<mirror>

<id>alimaven</id>

<name>aliyun maven</name><url>https://maven.aliyun.com/repository/public</url>

<mirrorOf>central</mirrorOf>

</mirror>

</mirrors>

# Install Jenkins; remember to adjust the JDK directory

rm -rf /var/jenkins/

docker rm -f jenkins

mkdir -p /var/jenkins/

chmod -R 777 /var/jenkins/

docker run --name jenkins \

--restart=always \

--network macvlan31 --ip=192.168.1.241 \

-v /var/jenkins/:/var/jenkins_home/ \

-v /usr/local/jdk:/usr/local/jdk \

-v /usr/local/maven:/usr/local/maven \

-e JENKINS_UC=https://mirrors.cloud.tencent.com/jenkins/ \

-e JENKINS_UC_DOWNLOAD=https://mirrors.cloud.tencent.com/jenkins/ \

-d jenkins/jenkins:2.442-jdk17

# Jenkins plugin mirrors commonly used in China

# tencent https://mirrors.cloud.tencent.com/jenkins/

# huawei https://mirrors.huaweicloud.com/jenkins/

# tsinghua https://mirrors.tuna.tsinghua.edu.cn/jenkins/

# ustc https://mirrors.ustc.edu.cn/jenkins/

# bit http://mirror.bit.edu.cn/jenkins/

# View the initial admin password

docker logs -f jenkins

Jenkins initial setup is required. An admin user has been created and a password generated.

Please use the following password to proceed to installation:

dc6e3167a27e4823a043a10b9d96d240

This may also be found at: /var/jenkins_home/secrets/initialAdminPassword

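Open http://192.168.1.241:8080 and paste the password shown above

Wait for initialization => choose "Select plugins to install" => click Install => wait for the installation to finish

Create the first admin user with username and password root and any email address (for example x@163.com) => Save and Finish

Set the Jenkins URL to http://192.168.1.241:8080 (or a domain name if you have one) => Save and Finish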

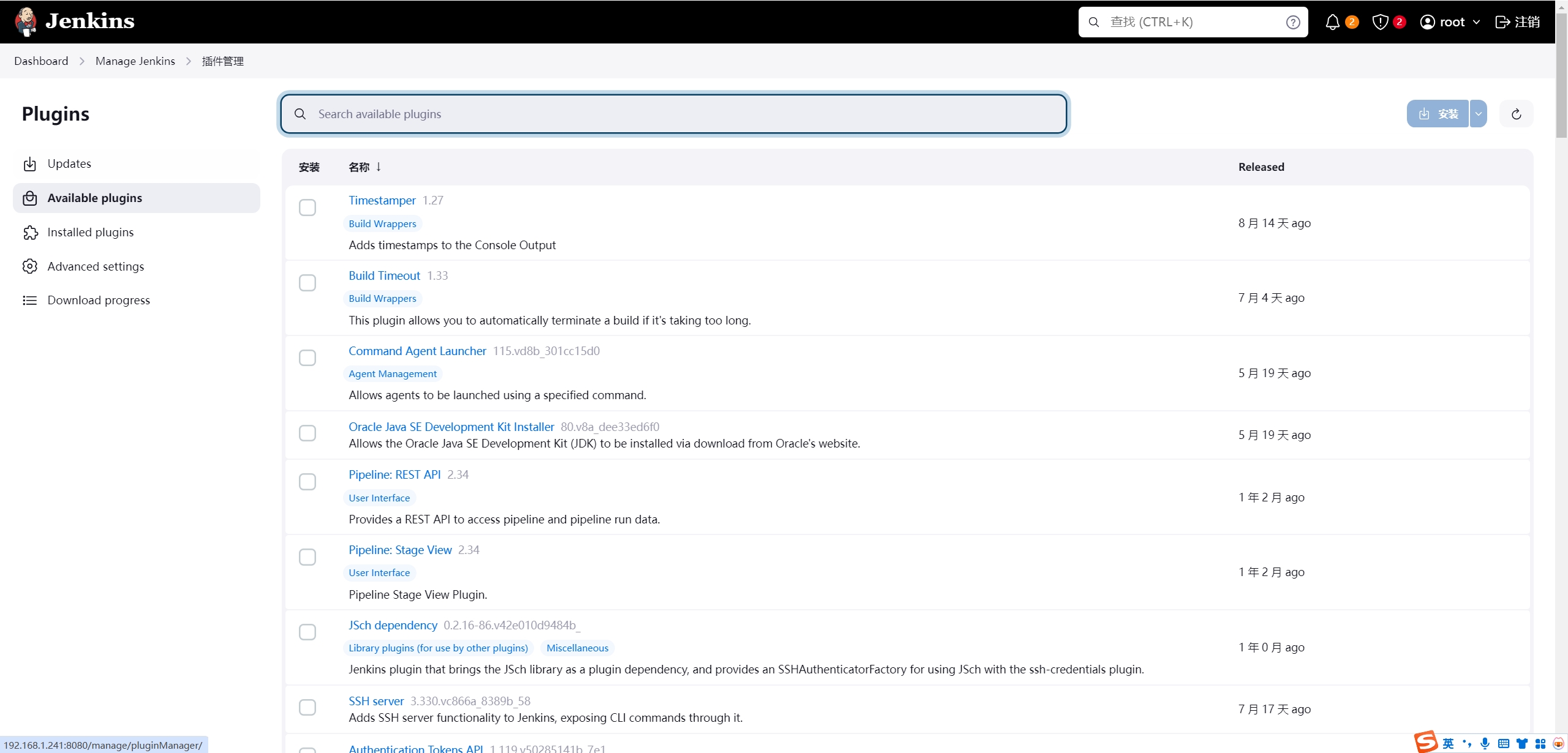

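On the home page, click Manage Jenkins -> Manage Plugins

On the Available tab, add two plugins: Git Parameter and Publish Over SSH

Click Download now and install after restart, then tick the option to restart Jenkins once the installation completes

Manage Jenkins -> Global Tool Configuration -> set the JDK and Maven paths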

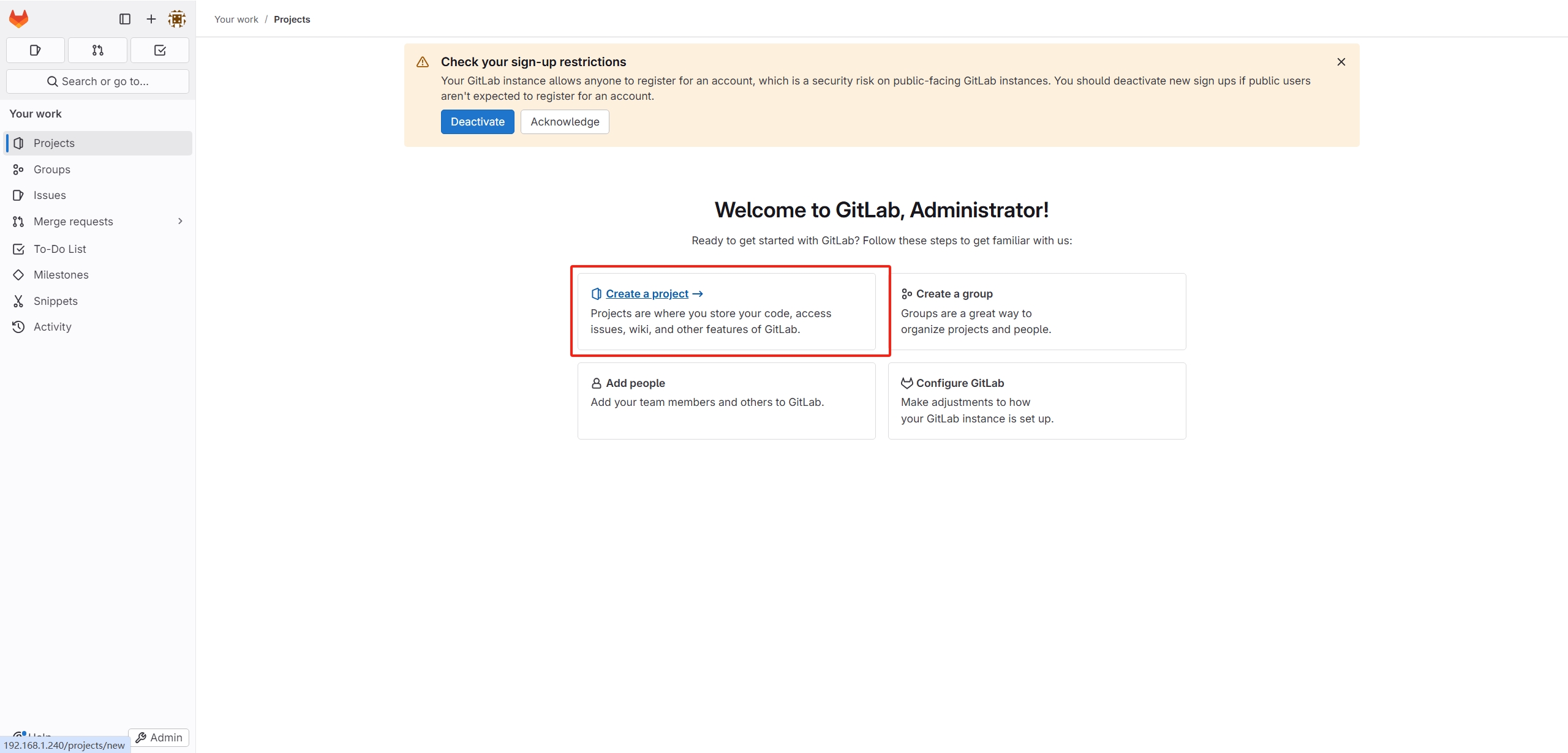

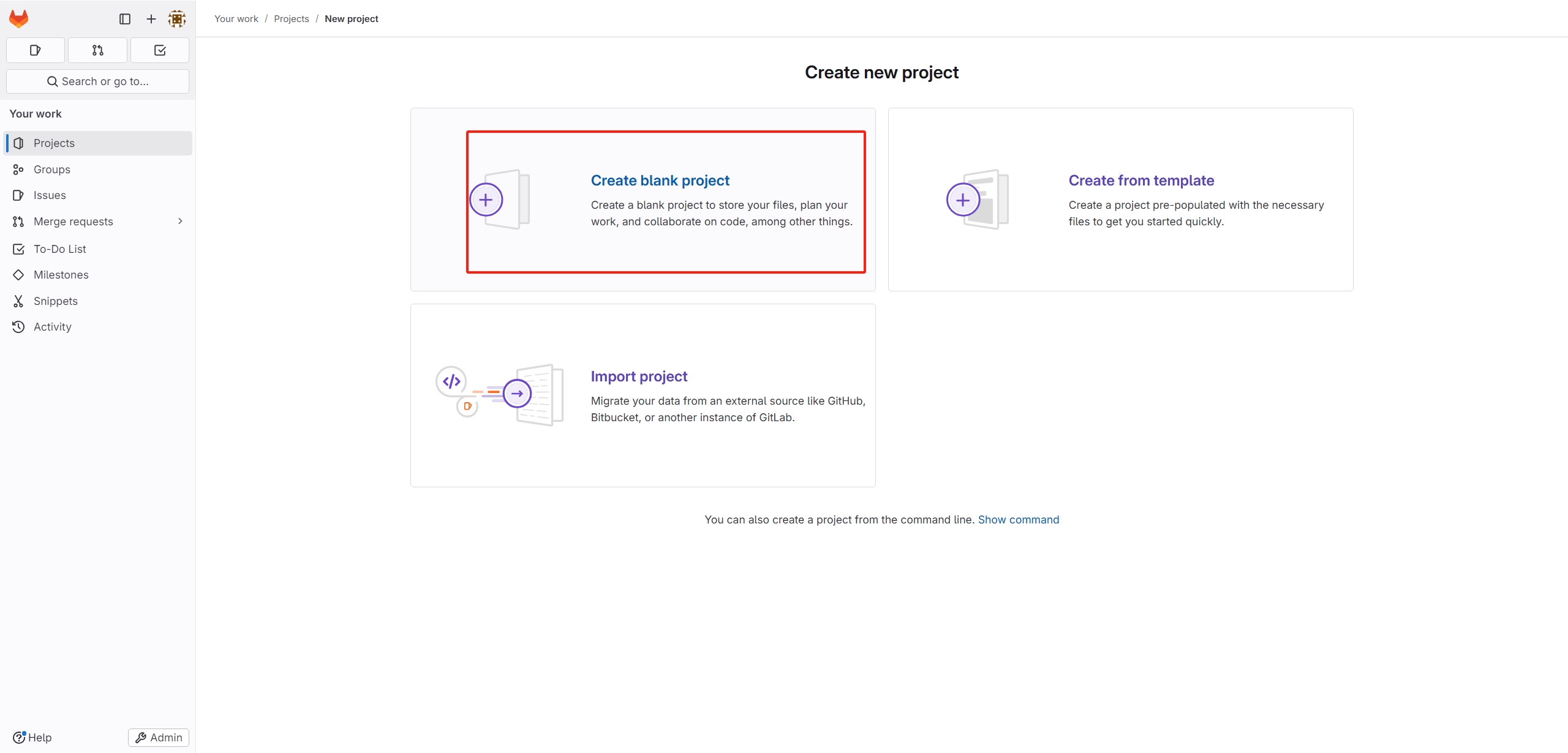

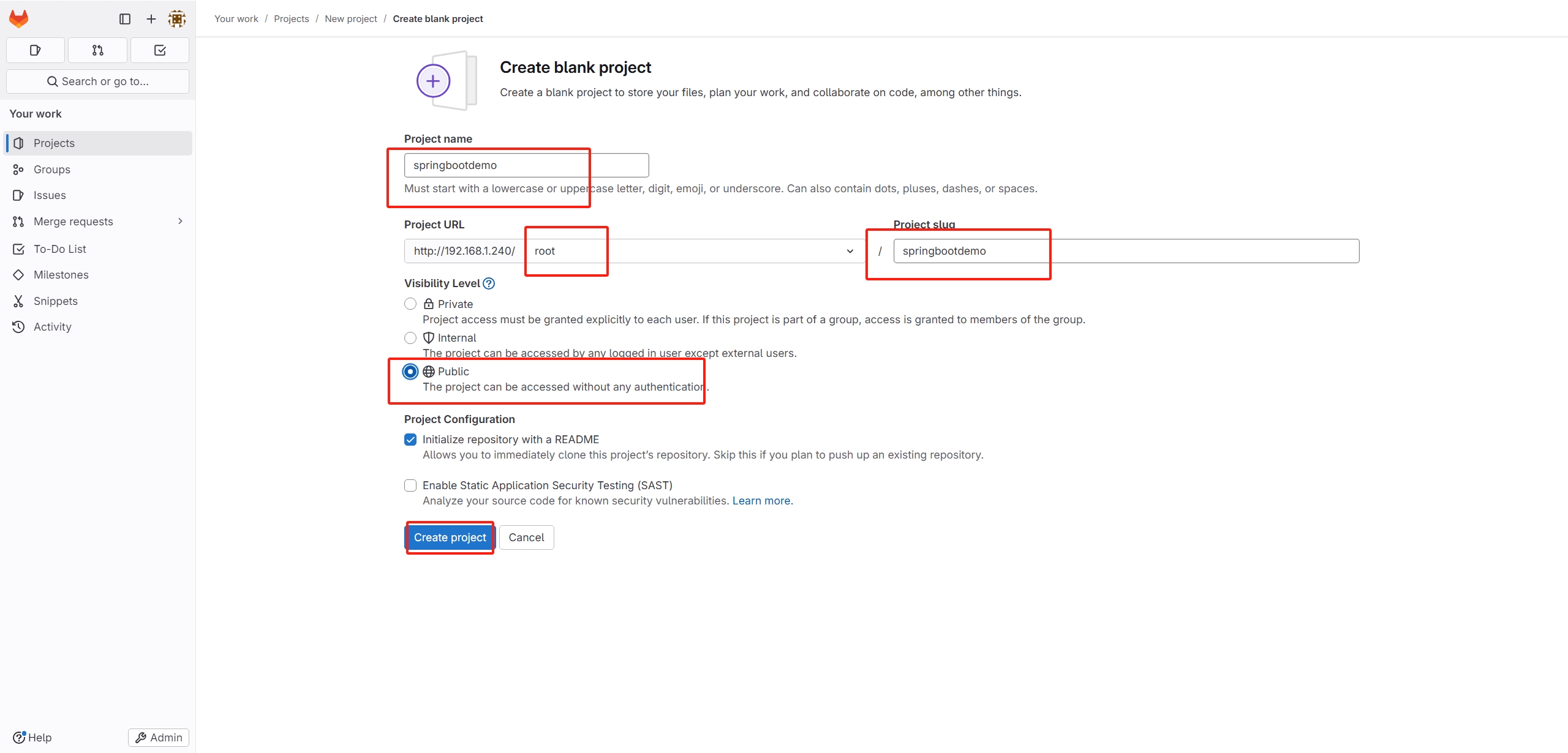

Create a project in GitLab

# Clone the repository

git clone http://192.168.1.240/root/springbootdemo.git

# Add files and commit

git add .

git commit -m "first-commit"

Install Docker, Docker Compose, and JDK on 176

# Install OpenJDK 8

# 2 CPU cores, at least 4 GB RAM, CentOS 7

yum -y install java-1.8.0-openjdk-devel.x86_64

sudo tee -a /etc/profile > /dev/null <<-'EOF'

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

EOF

source /etc/profile

echo $JAVA_HOME

jekins设置

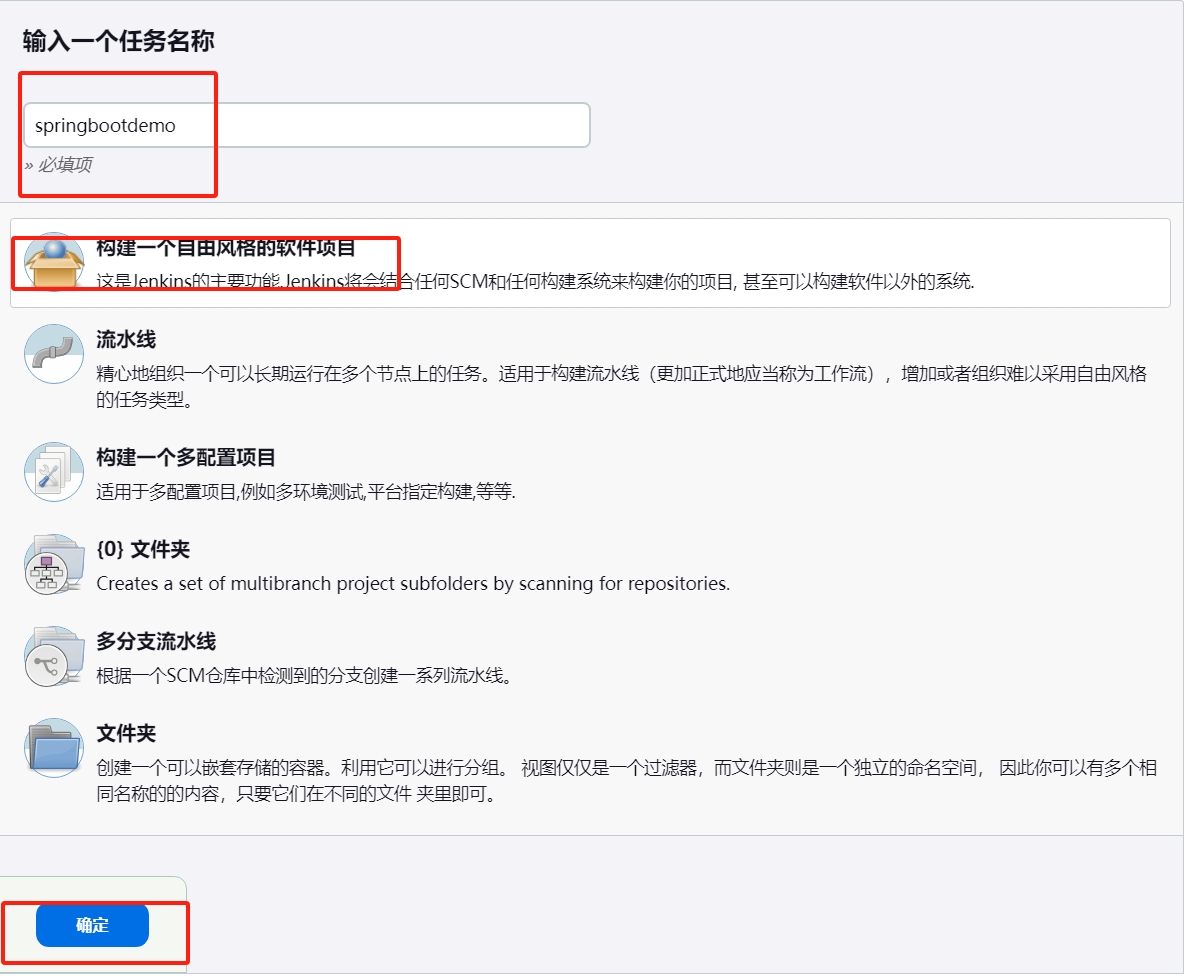

新建项目名为springbootdemo选择⾃由⻛格

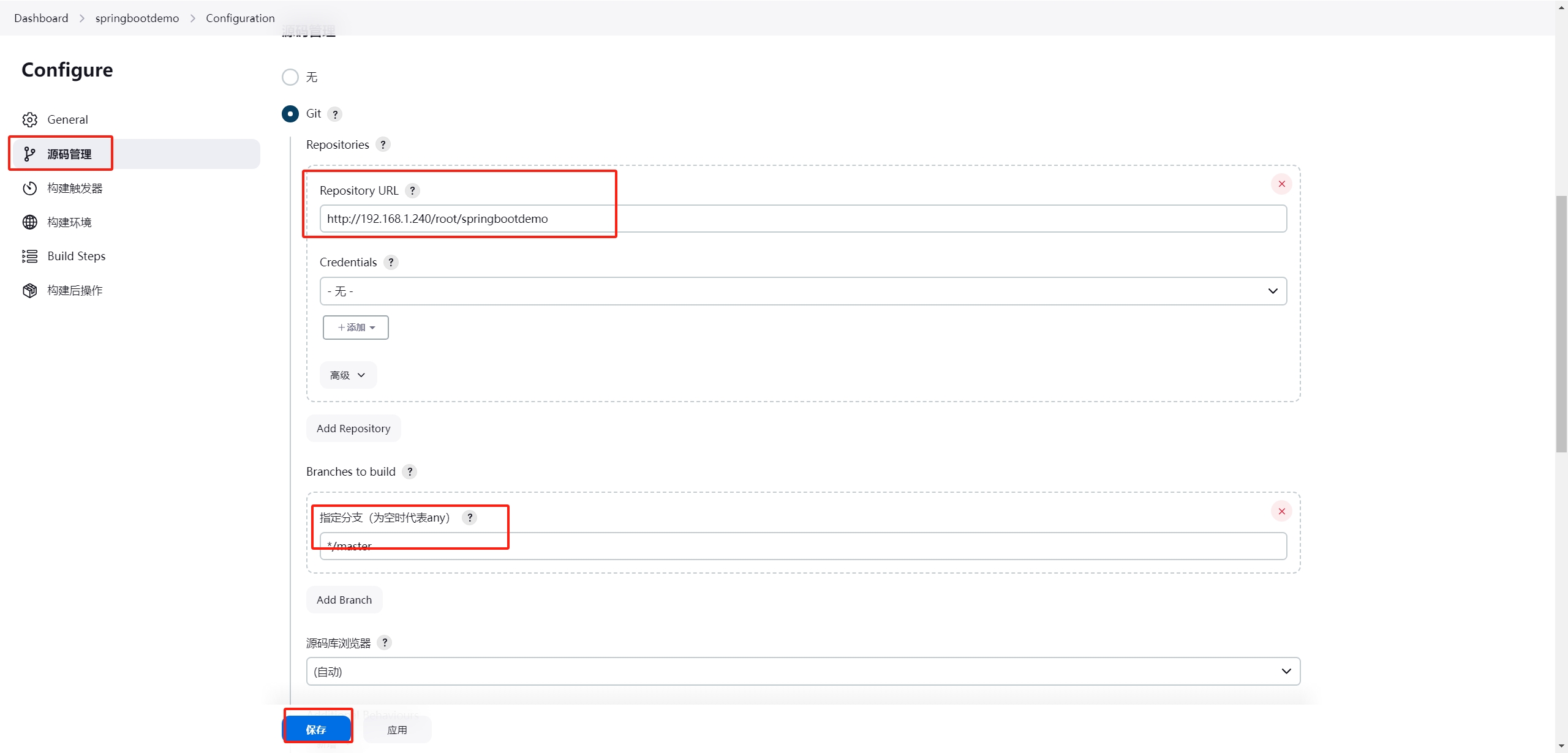

源码管理=>Repository URL:http://192.168.1.240/root/springbootdemo=>指定分支(为空时代表any)*/master

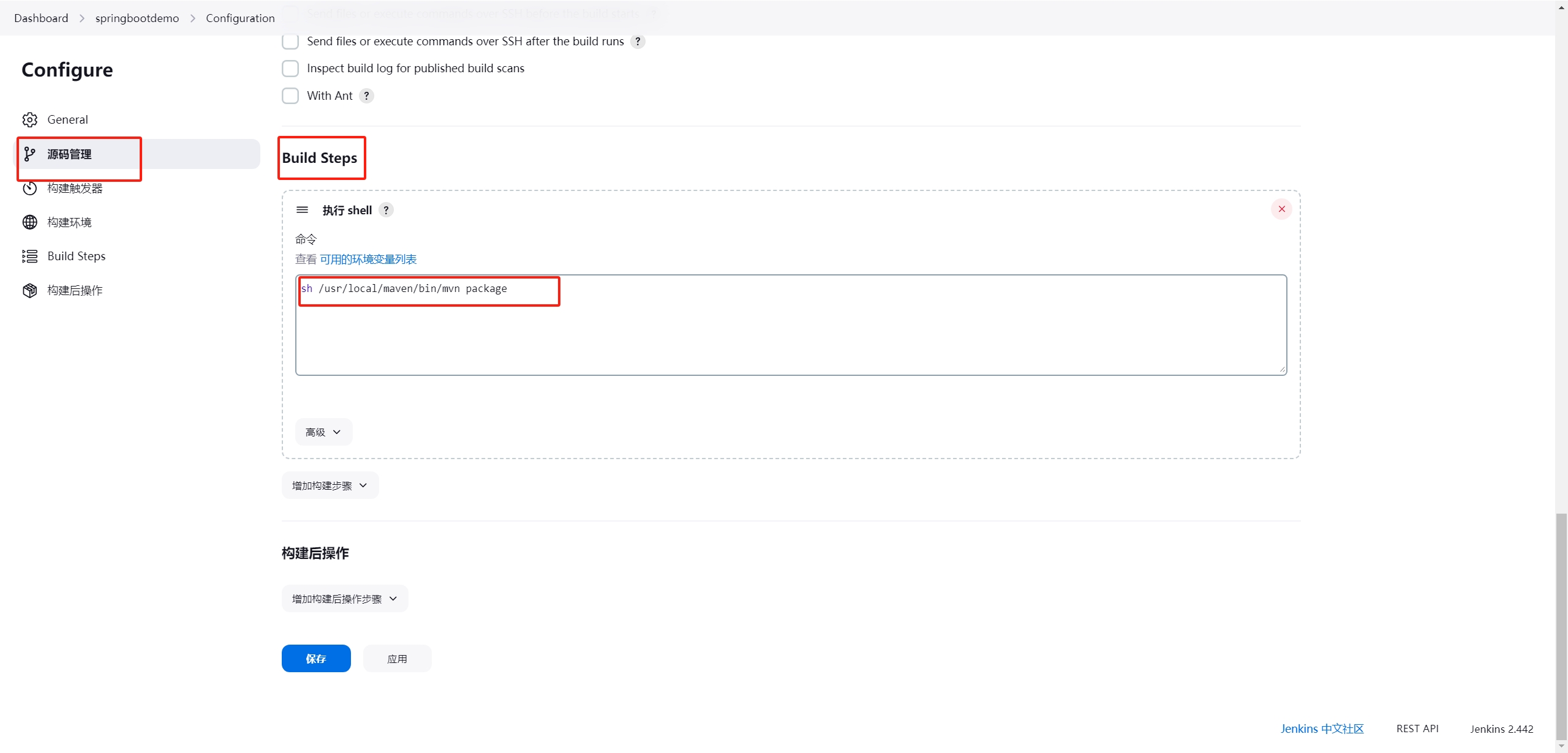

Build Steps=>添加构建步骤=>执行shell=>应用并保存

sh /usr/local/maven/bin/mvn package

# The checked-out code lives here, because the Jenkins home volume is mounted on the host

cd /var/jenkins/workspace

ls

springbootdemo

# It contains the target directory

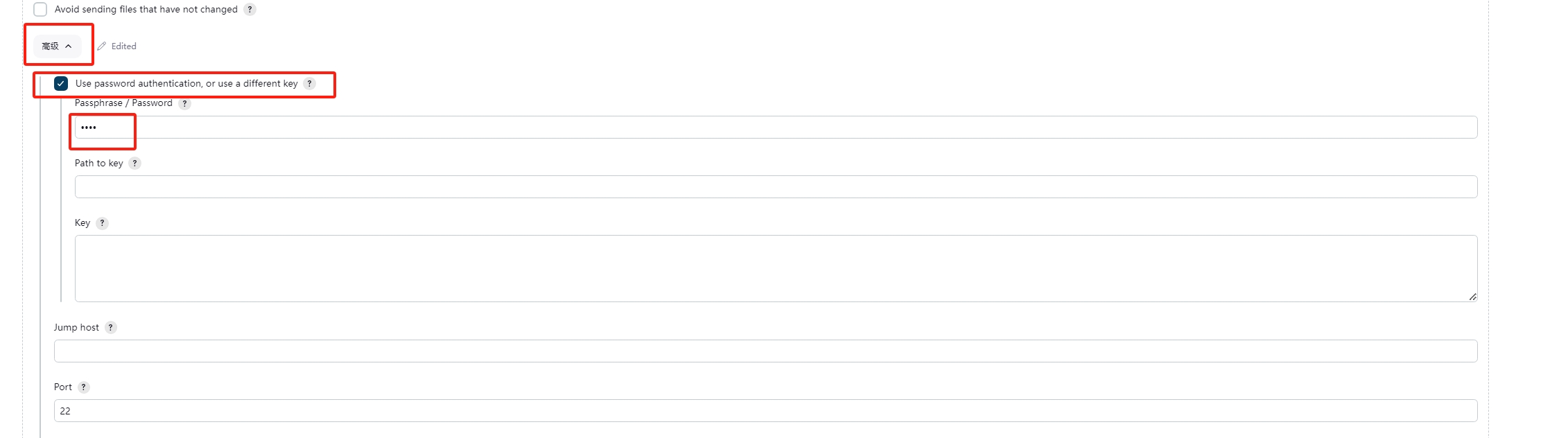

Configure the 176 target server: from the main page go to Manage Jenkins -> Configure System, find Publish Over SSH, and add an SSH Server

Name:Target-176

Hostname:192.168.1.176

Username:root

Remote Directory:/usr/local

Click Advanced => tick Use password authentication, or use a different key => enter the password (Password: root) => Save

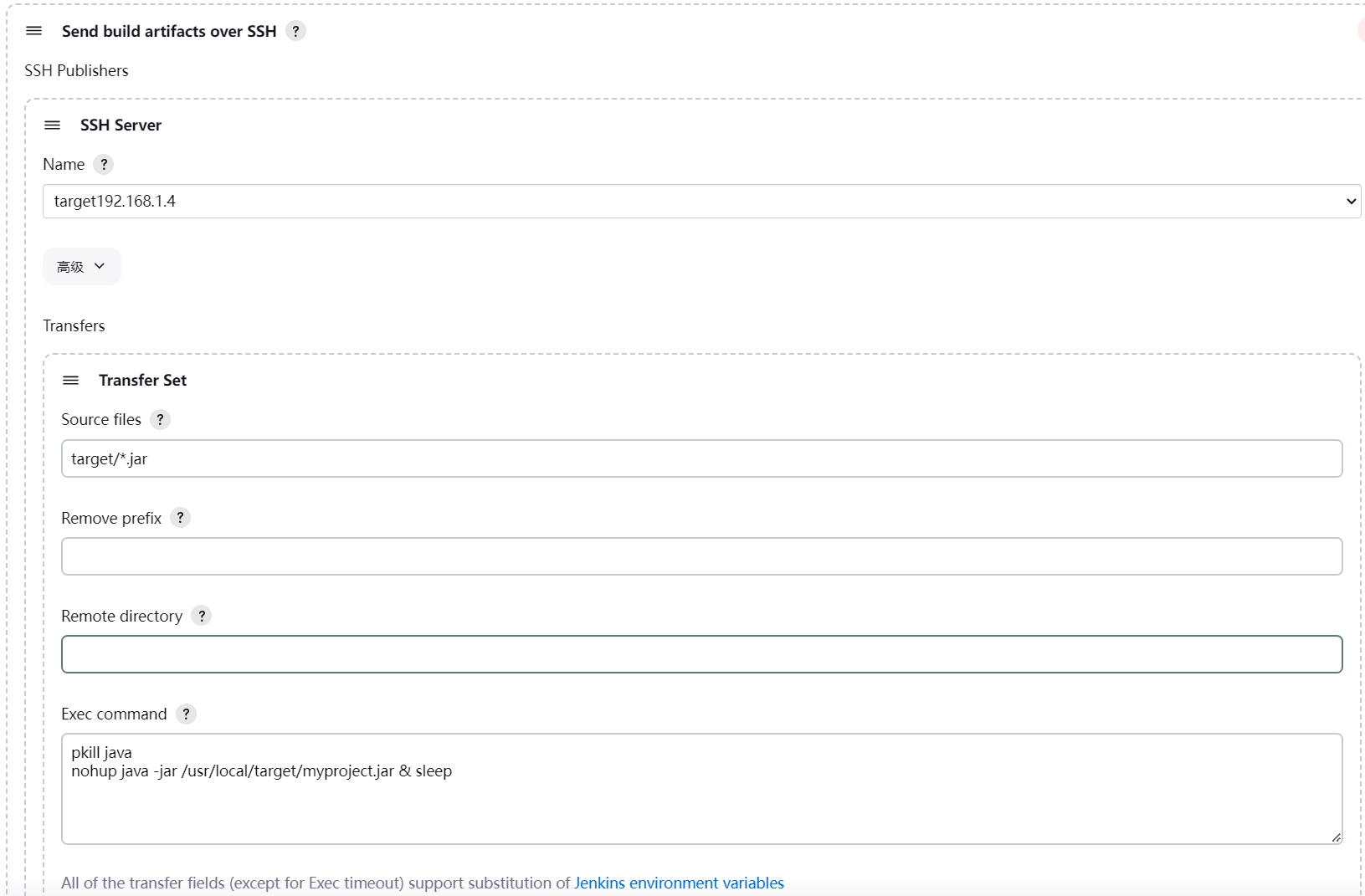

Choose the post-build action "Send build artifacts over SSH" to publish the jar to the 176 server and run it

SSH Server:

name:Target-176

Source files:target/*.jar

Exec command:

ps -ef |grep java |grep -w springbootdemo-0.0.1-SNAPSHOT.jar|grep -v 'grep'|awk '{print $2}'| xargs -i{} kill -9 {} && \

sleep 1 && nohup java -server -jar /usr/local/target/springbootdemo-0.0.1-SNAPSHOT.jar --server.port=8888 &

Note: the command above must be runnable on 176
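The first half of the Exec command picks the PID column out of ps -ef for the running jar. As a rough sketch of that step in isolation, the hypothetical helper pids_for_jar below does the same filtering with a single awk call and can be tried on sample text without killing anything; the sample ps line is made up for illustration.

```shell
#!/bin/sh
# Read `ps -ef` formatted lines on stdin and print the PID (2nd column) of
# every line that mentions the given jar, skipping grep's own process line.
pids_for_jar() {
  awk -v jar="$1" 'index($0, jar) > 0 && $0 !~ /grep/ { print $2 }'
}

# Dry run on made-up sample output; on the target host the input would be `ps -ef`.
printf '%s\n' \
  'root 1234 1 0 10:00 ? 00:00:05 java -server -jar /usr/local/target/springbootdemo-0.0.1-SNAPSHOT.jar --server.port=8888' \
  'root 999 1 0 10:00 ? 00:00:00 sshd' |
  pids_for_jar springbootdemo-0.0.1-SNAPSHOT.jar
```

On the real host the resulting PIDs would then be passed to kill, as the original one-liner does with xargs.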

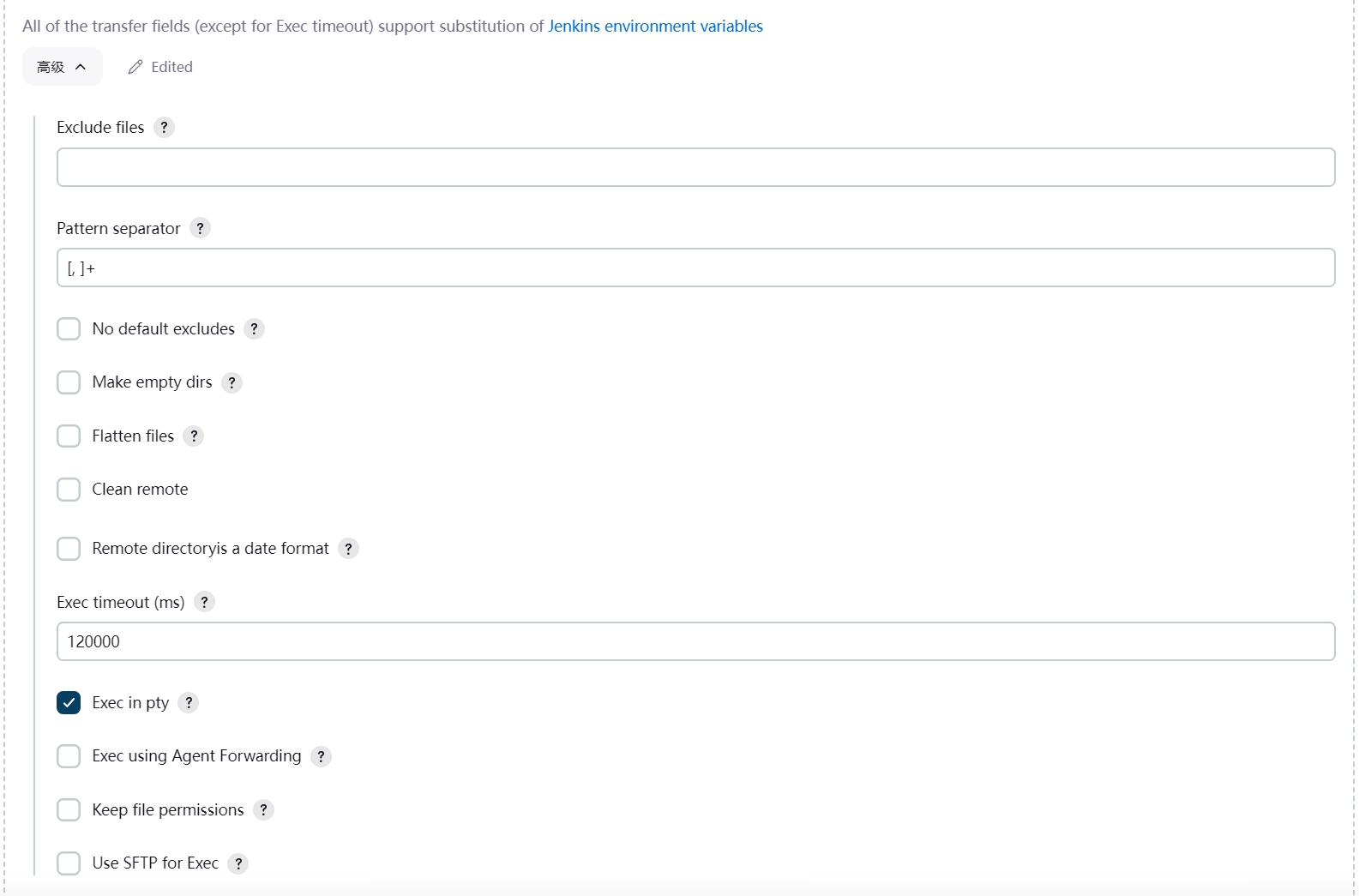

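Tick Advanced -> Exec in pty (very important) => Apply => Save

Run the build again and open http://192.168.1.176:8888

The application has been built and started automatically

Building the Docker Image Automatically

Install Docker on 176

Create a docker folder in the project directory and add a Dockerfile in it for building the image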

FROM openjdk:8-slim

WORKDIR /usr/local

COPY springbootdemo-0.0.1-SNAPSHOT.jar .

CMD java -jar springbootdemo-0.0.1-SNAPSHOT.jar

Commit and push to GitLab

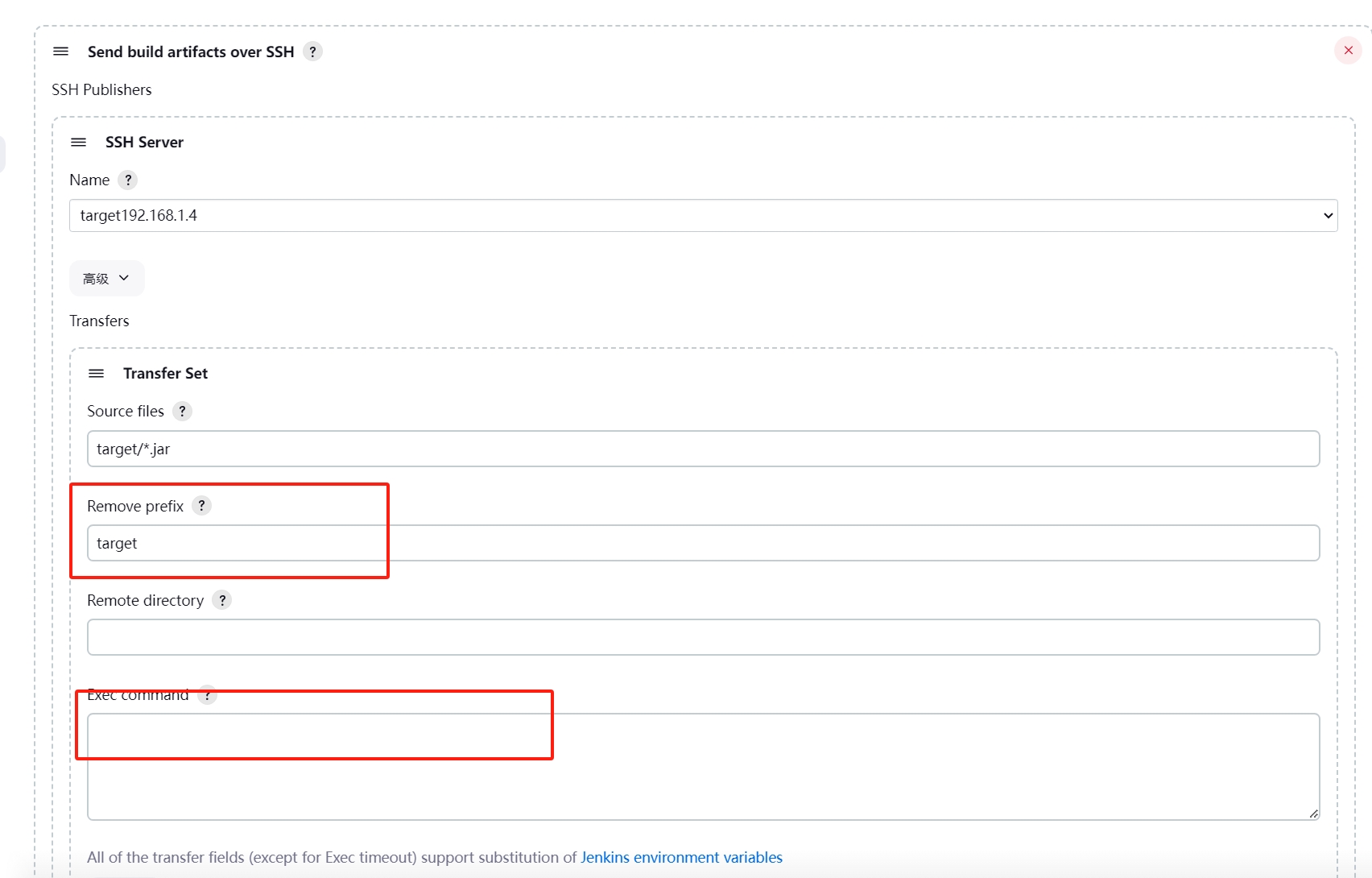

Back in Jenkins, find the post-build action and delete everything in Exec command

Set Remove prefix: target

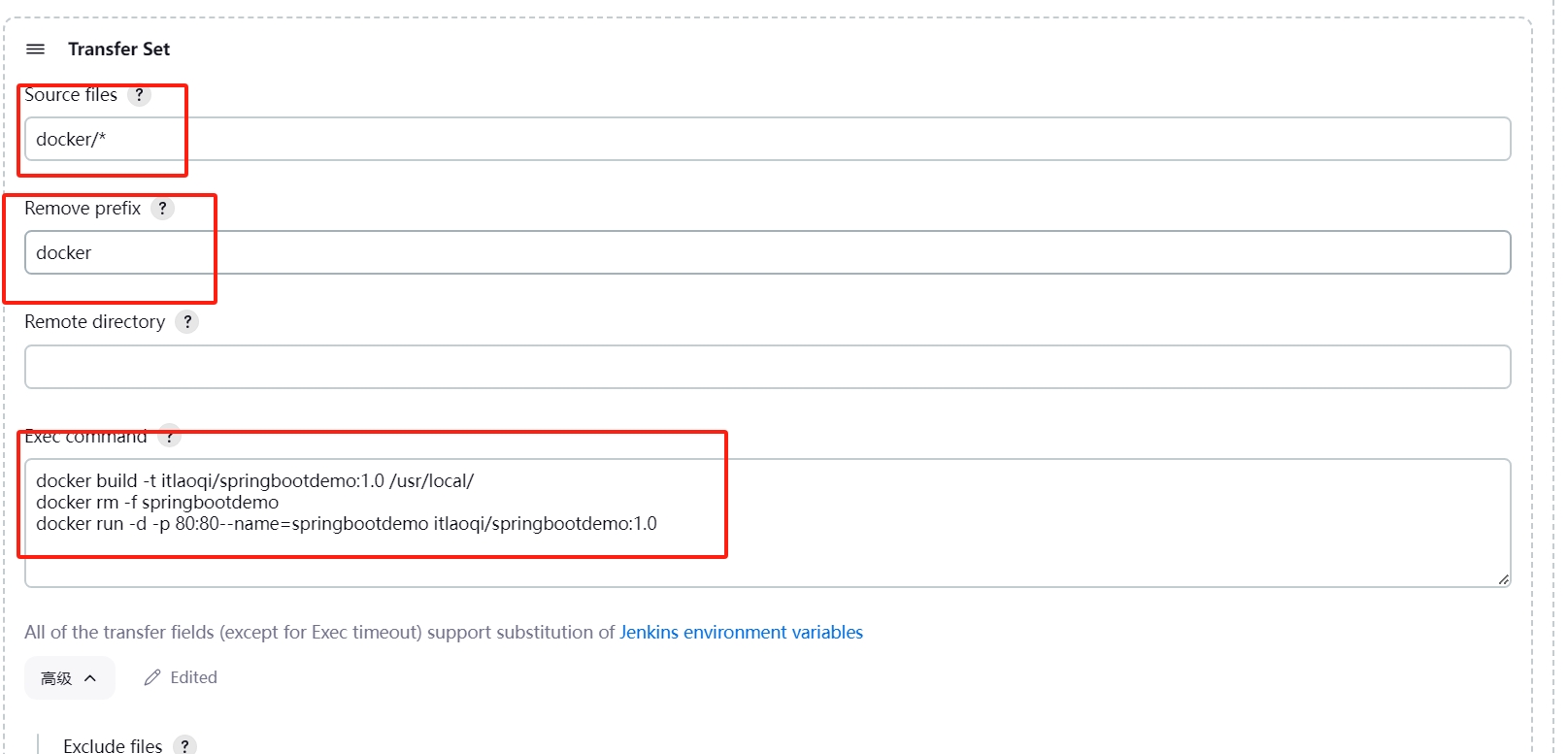

Under the 176 server, add another Transfer Set that transfers the Dockerfile and runs the commands that build the image and start the container

Source files:docker/*

Remove prefix:docker

Exec command:

docker build -t test/springbootdemo:1.0 /usr/local/

docker rm -f springbootdemo

docker run -d -p 80:8081 --name=springbootdemo test/springbootdemo:1.0

Deploy the Harbor image registry on a separate VM (a private equivalent of Docker Hub)

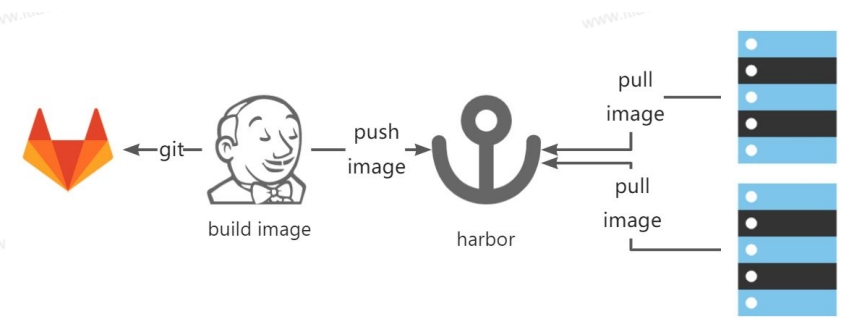

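Jenkins builds the image and pushes it to Harbor; the target server pulls the image from Harbor

Recommended: 2 CPUs, 4 GB RAM, 40 GB disk

Required: Docker Engine, Docker Compose, OpenSSL

Docker Compose is already installed, because recent Docker versions ship it as a plugin

Download the Harbor offline installer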

cd /usr/local

# Download with wget (the version must match the archive extracted below)

wget --no-check-certificate https://github.com/goharbor/harbor/releases/download/v2.12.2/harbor-offline-installer-v2.12.2.tgz

# Extract

tar xzvf harbor-offline-installer-v2.12.2.tgz

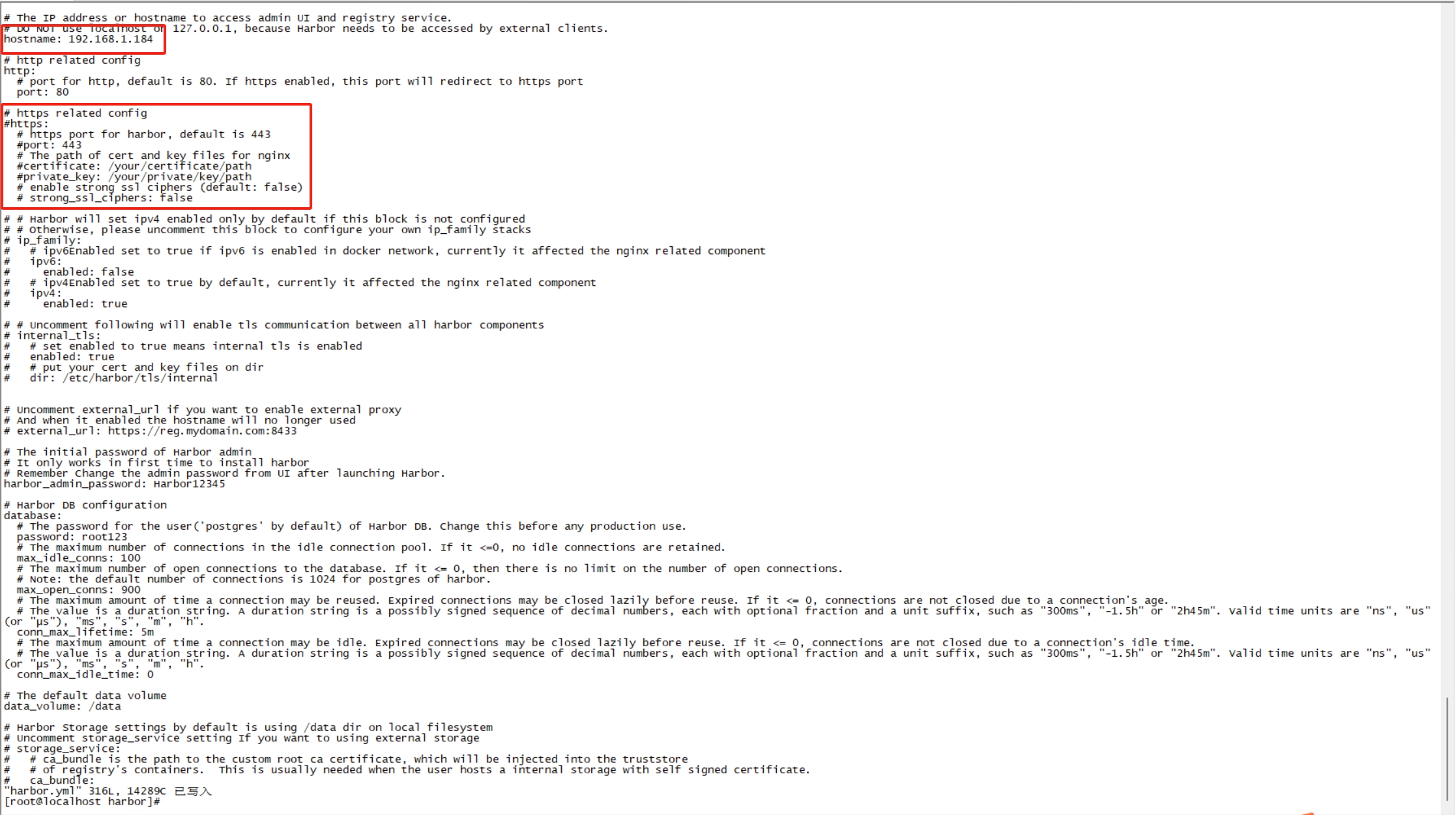

Edit Harbor's main configuration file, harbor.yml

hostname: 192.168.1.155

Comment out the https section

Default password: Harbor12345

docker ps

4e26ad58f858 goharbor/nginx-photon:v2.12.2 "nginx -g 'daemon of…" 12 minutes ago Up 12 minutes (healthy) 0.0.0.0:80->8080/tcp, :::80->8080/tcp nginx

Install Harbor

./install.sh

Harbor consists of more than ten containers, so docker-compose is required to install it quickly

To uninstall: docker compose down

Access:

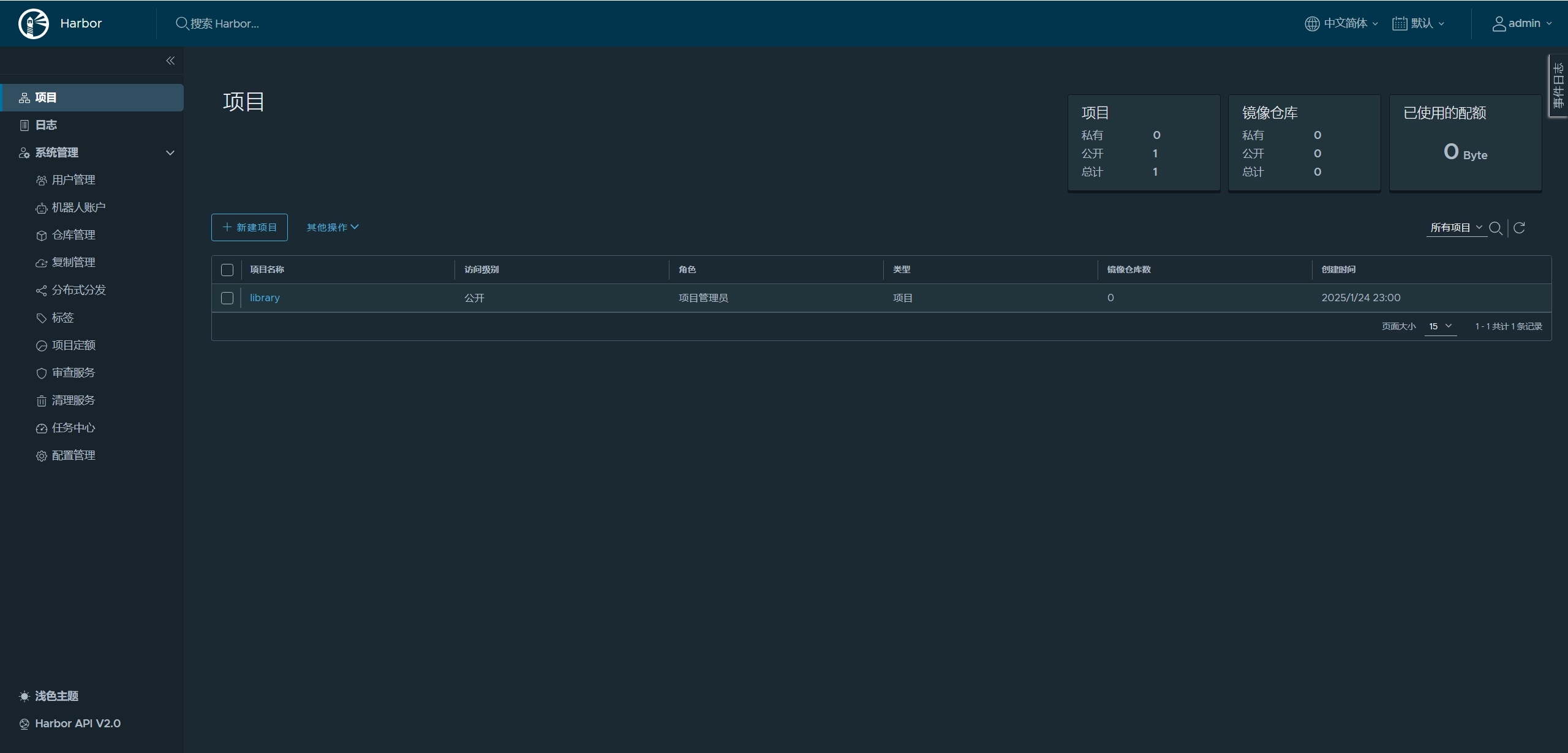

192.168.1.155:80

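Username: admin

Password: Harbor12345

Create a new project in Harbor

Project name: public

Access level: Public

On the 155 Harbor server, edit daemon.json and add the Harbor private registry address under insecure-registries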

cat > /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": [],

"insecure-registries": ["192.168.1.155:80"]

}

EOF

systemctl daemon-reload

systemctl restart docker

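Retag the springbootdemo image following the naming convention: Harbor IP:Port/project/image:Tag

Log in to Harbor with the username and password and push the local image with docker push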

docker images

docker tag 0e2577308988 192.168.1.155:80/public/springbootdemo:1.0

docker login -u admin -p Harbor12345 192.168.1.155:80

docker push 192.168.1.155:80/public/springbootdemo:1.0

Open Harbor to confirm that the upload succeeded
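The naming convention above (Harbor IP:Port/project/image:Tag) is easy to get wrong by hand. A small sketch, with the hypothetical helper name image_ref, that assembles the reference from its four parts:

```shell
#!/bin/sh
# Build "<registry>/<project>/<image>:<tag>" from its parts.
# $1: registry host:port, $2: Harbor project, $3: image name, $4: tag
image_ref() {
  printf '%s/%s/%s:%s\n' "$1" "$2" "$3" "$4"
}

ref=$(image_ref 192.168.1.155:80 public springbootdemo 1.0)
echo "$ref"

# The reference could then be used as, for example:
#   docker tag <local image id> "$ref" && docker push "$ref"
```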

On the 176 target server, configure the private registry address

cat > /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://fskvstob.mirror.aliyuncs.com"],

"insecure-registries": ["192.168.1.155:80"]

}

EOF

systemctl daemon-reload

systemctl restart docker

# Pull the image that was pushed above

docker pull 192.168.1.155:80/public/springbootdemo:1.0

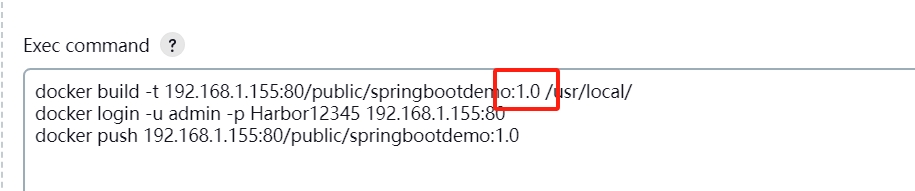

Change the Jenkins post-build Exec command to

docker build -t 192.168.1.155:80/public/springbootdemo:2.0 /usr/local/

docker login -u admin -p Harbor12345 192.168.1.155:80

docker push 192.168.1.155:80/public/springbootdemo:2.0

Automating CI with Jenkins

Make sure insecure-registries is configured on the 155 node

In the Jenkins (241) system configuration, add an SSH Server:

name:Harbor-155

hostname:192.168.1.155

username:root

password:root

remote directory:/usr/local

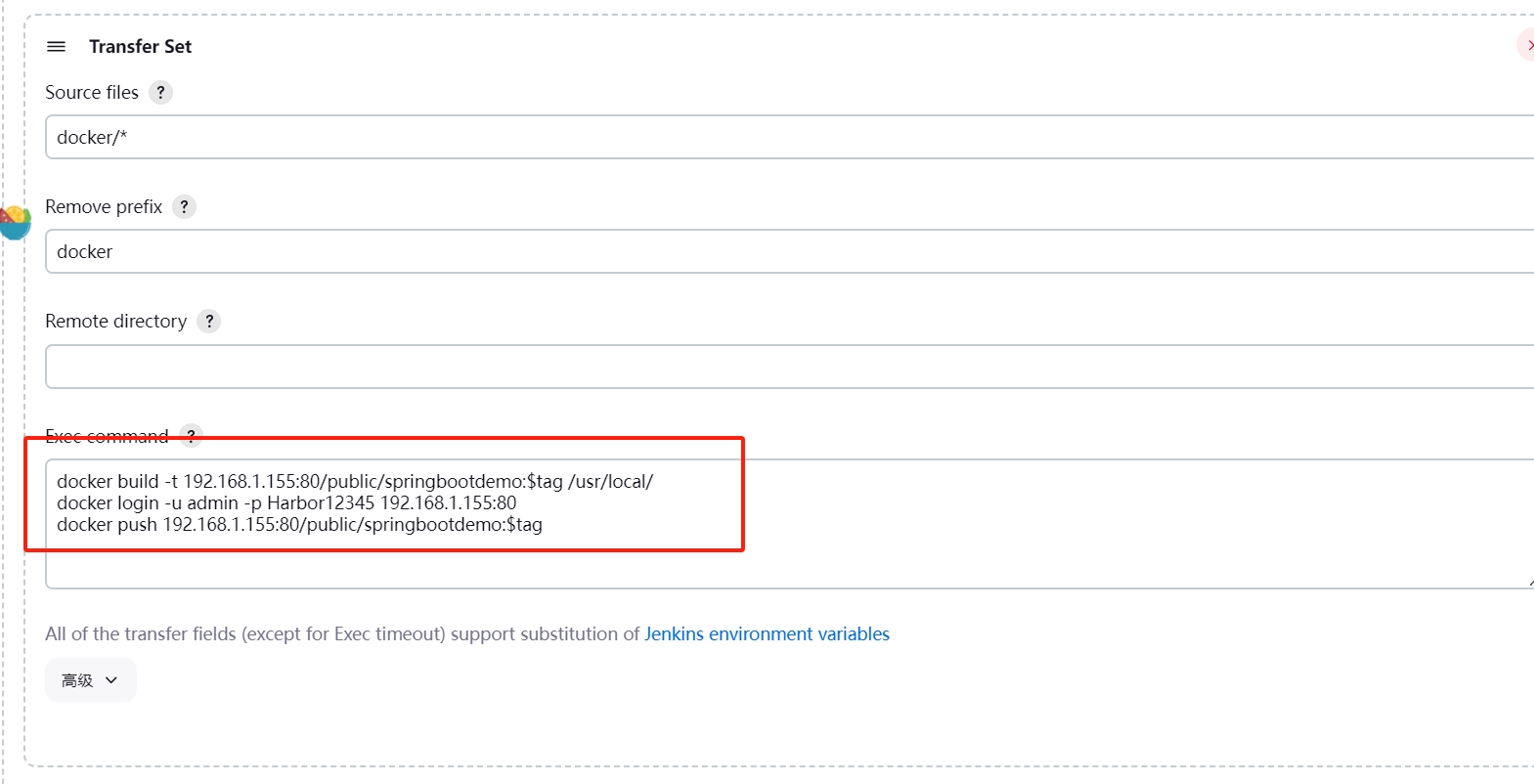

Before the existing post-build step for 176, add a step for the Harbor 155 node

Transfer the jar file and the Dockerfile to 155, then build and push the image

docker build -t 192.168.1.155:80/public/springbootdemo:1.0 /usr/local/

docker login -u admin -p Harbor12345 192.168.1.155:80

docker push 192.168.1.155:80/public/springbootdemo:1.0

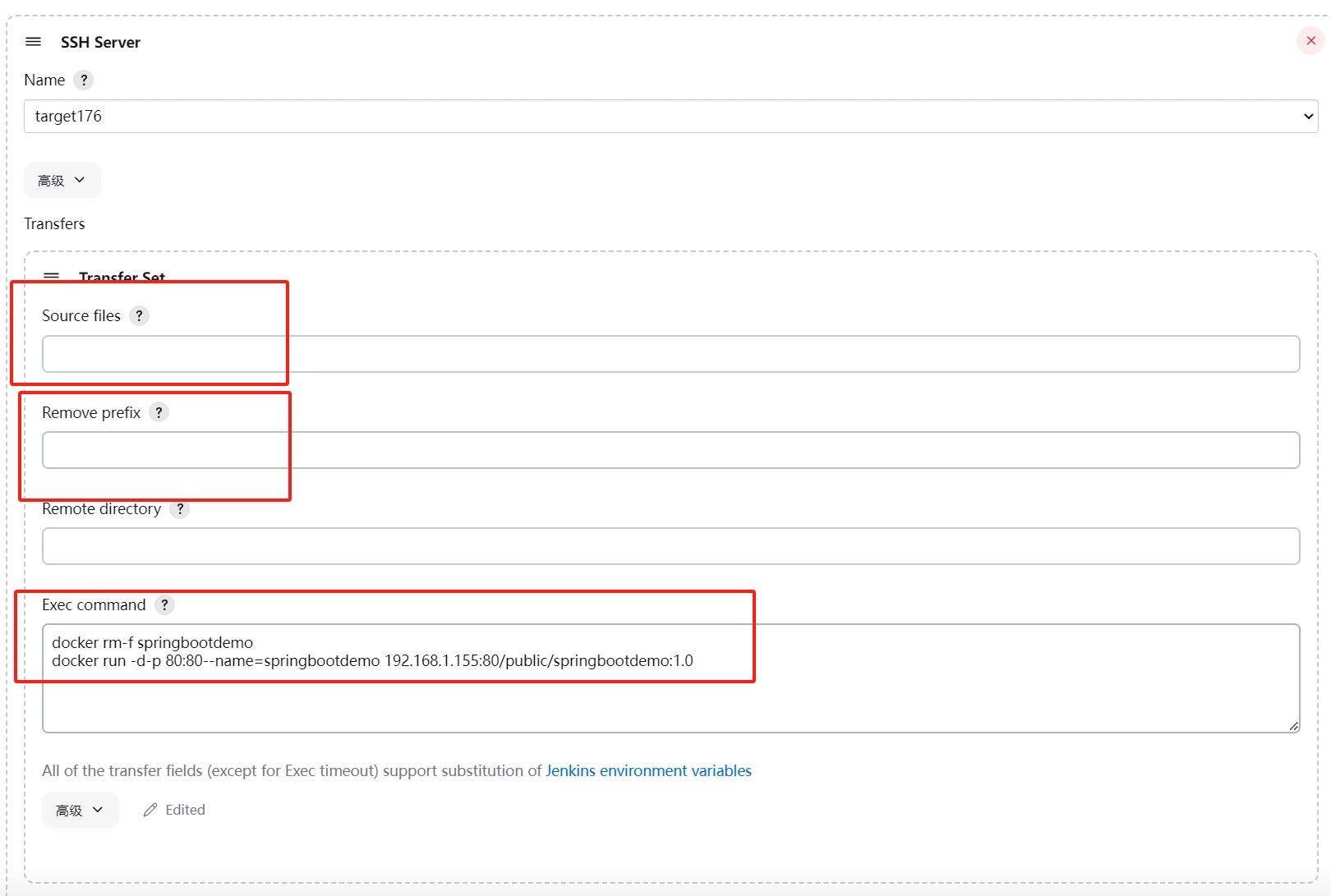

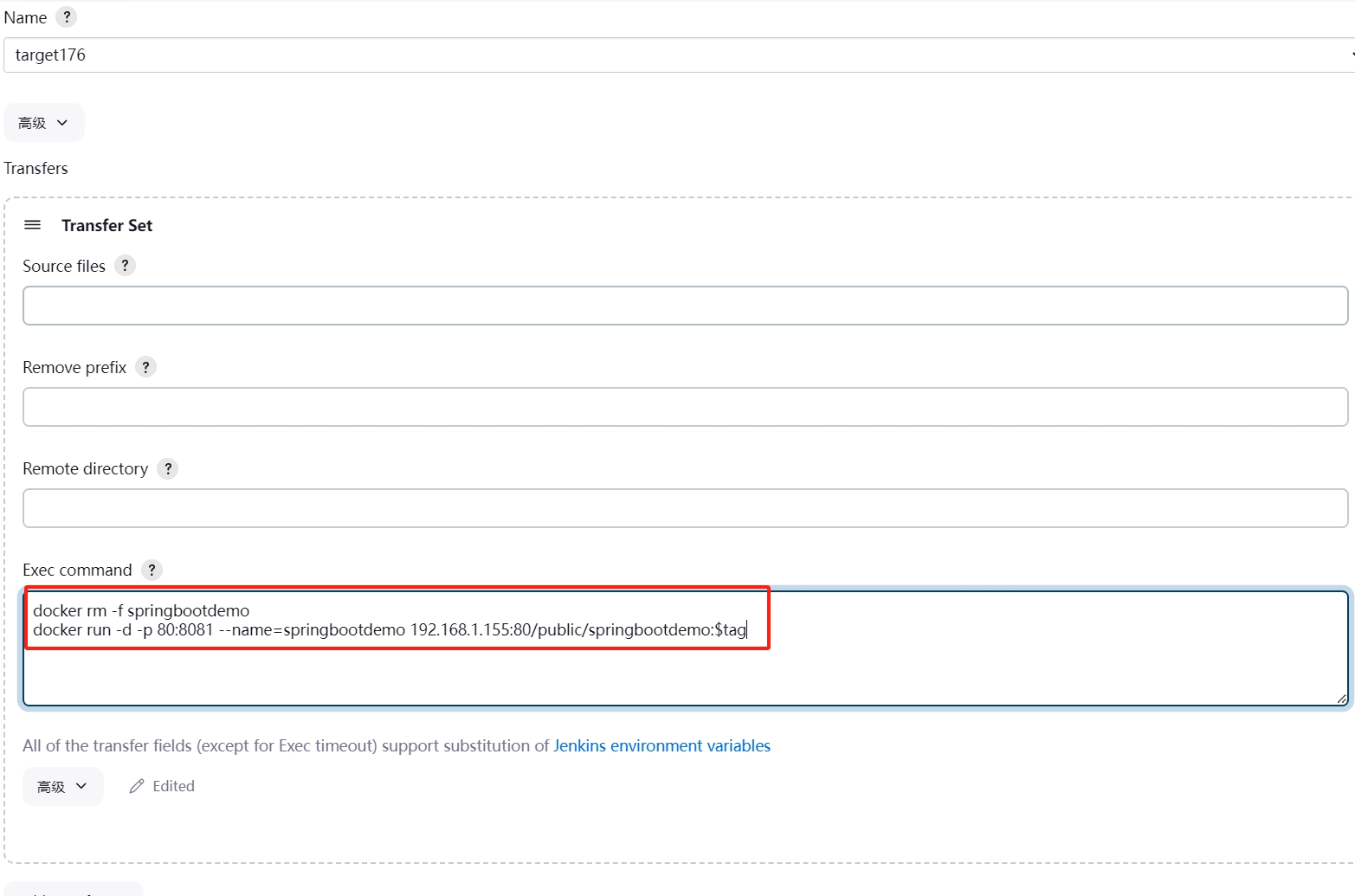

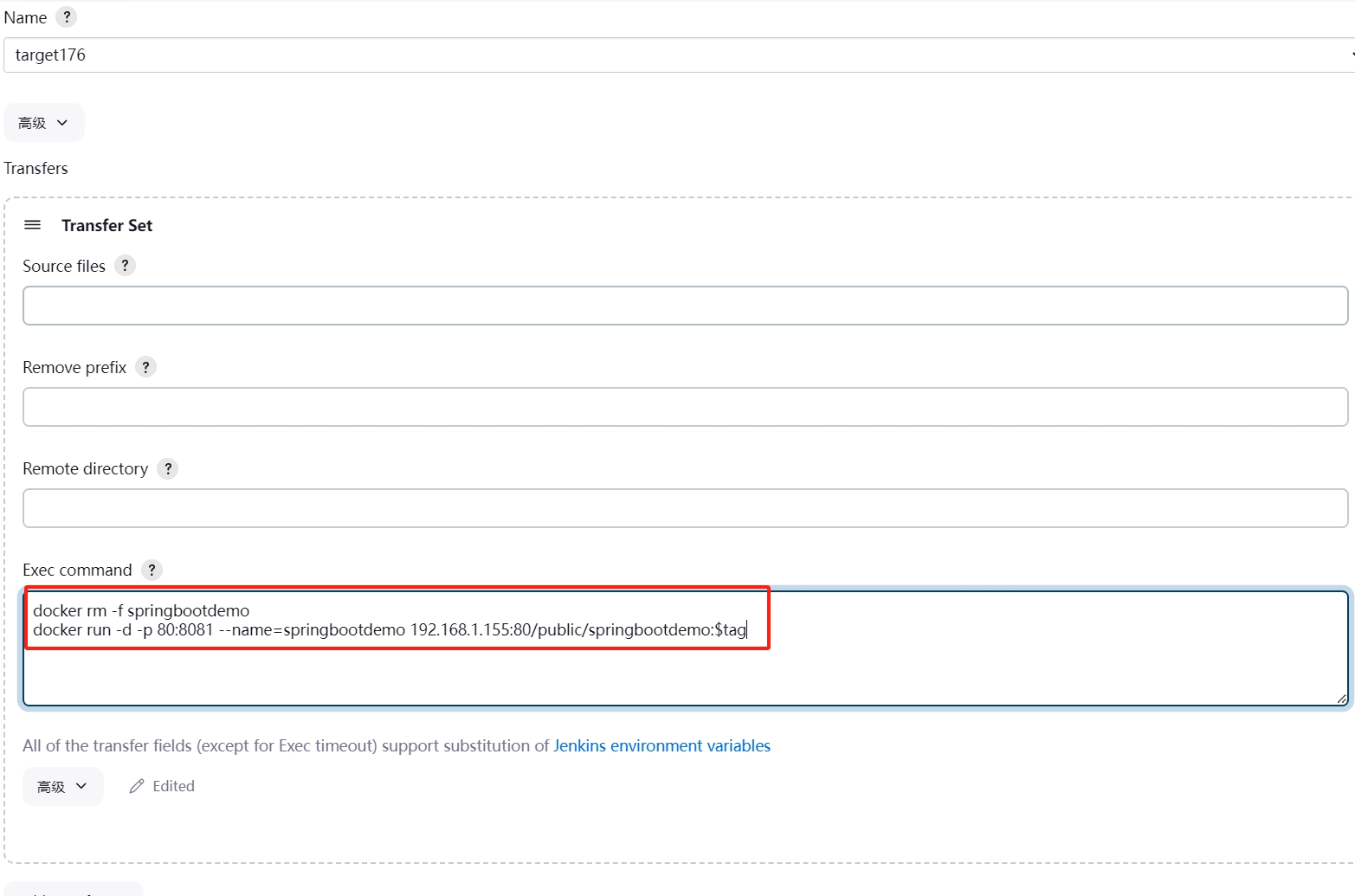

The 176 target is now only responsible for creating the container

docker rm -f springbootdemo

docker run -d -p 80:8081 --name=springbootdemo 192.168.1.155:80/public/springbootdemo:1.0

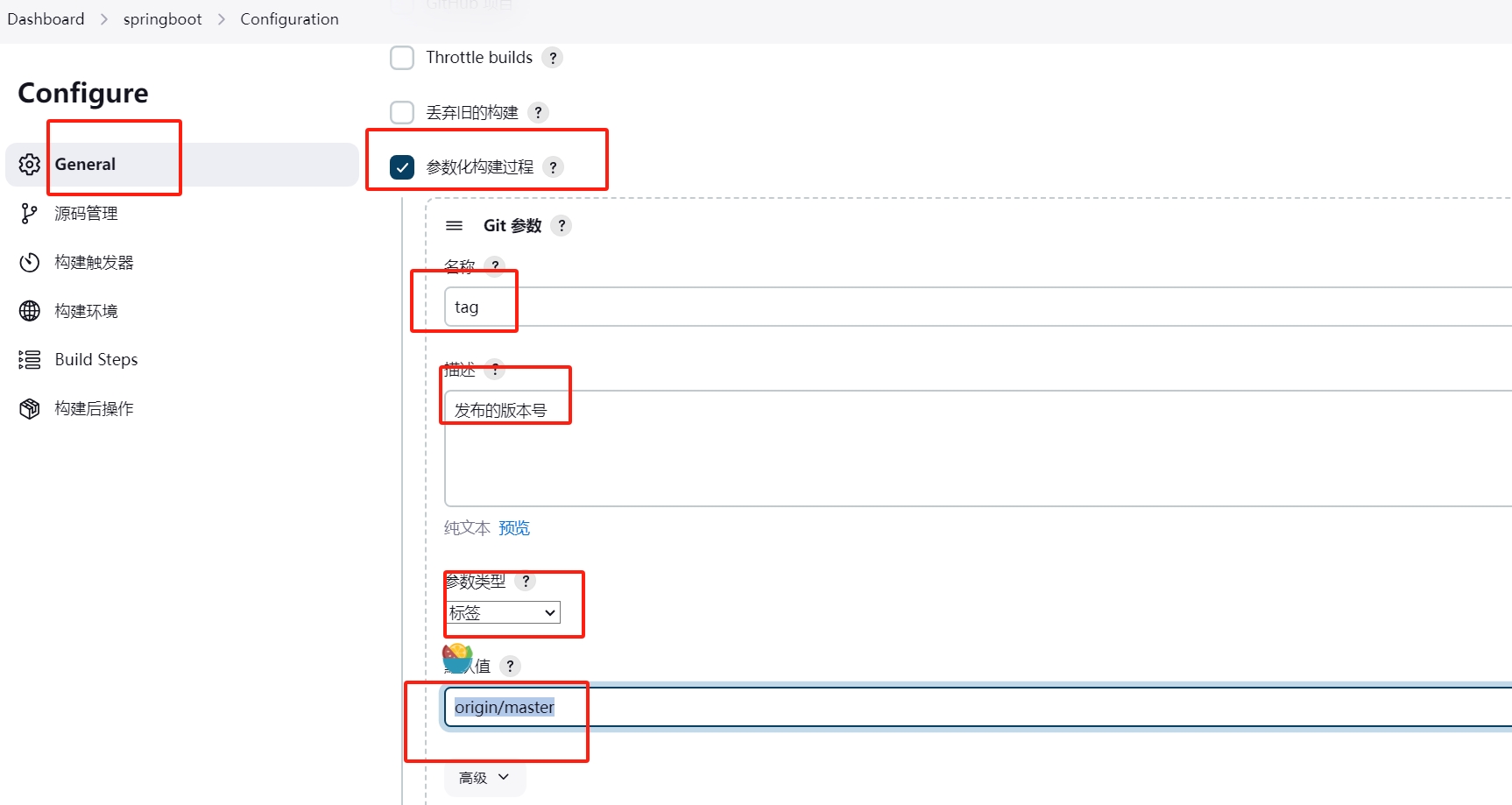

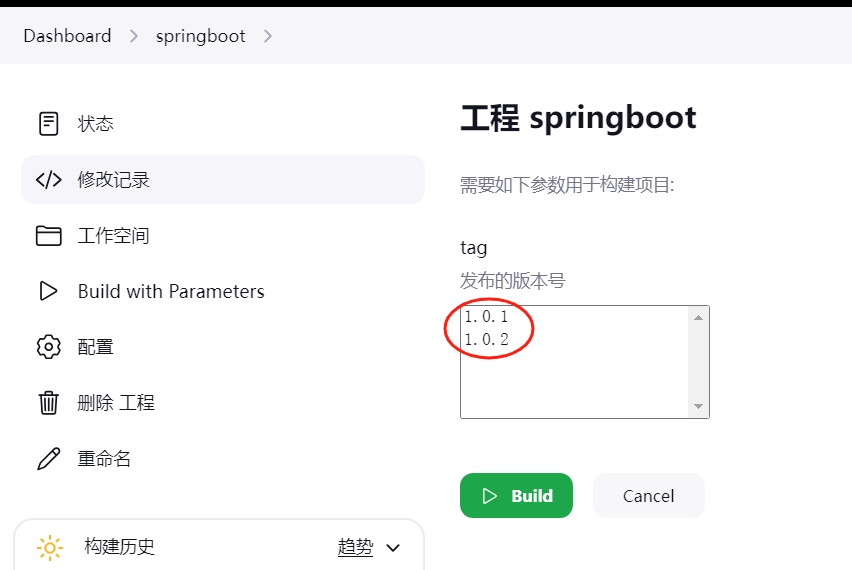

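Parameterized Jenkins Builds for Multi-Version Releases

This removes the hard-coded version number

This project is parameterized -> Git Parameter

Edit the Git parameter

Name: tag

Description: version to release

Default value: origin/master

In the build section, add a shell step before the existing package step that first checks out the selected version;

$tag refers to the selected version

git checkout $tag

In the Exec command for the 155 Harbor registry, replace every 1.0 with $tag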

docker build -t 192.168.1.155:80/public/springbootdemo:$tag /usr/local/

docker login -u admin -p Harbor12345 192.168.1.155:80

docker push 192.168.1.155:80/public/springbootdemo:$tag

In the Exec command for the 176 target server, replace 1.0 with $tag

docker rm -f springbootdemo

docker run -d -p 80:8081 --name=springbootdemo 192.168.1.155:80/public/springbootdemo:$tag

Create tag 1.0.0 in Git

Build with Parameters now appears; select 1.0.0 to run the build

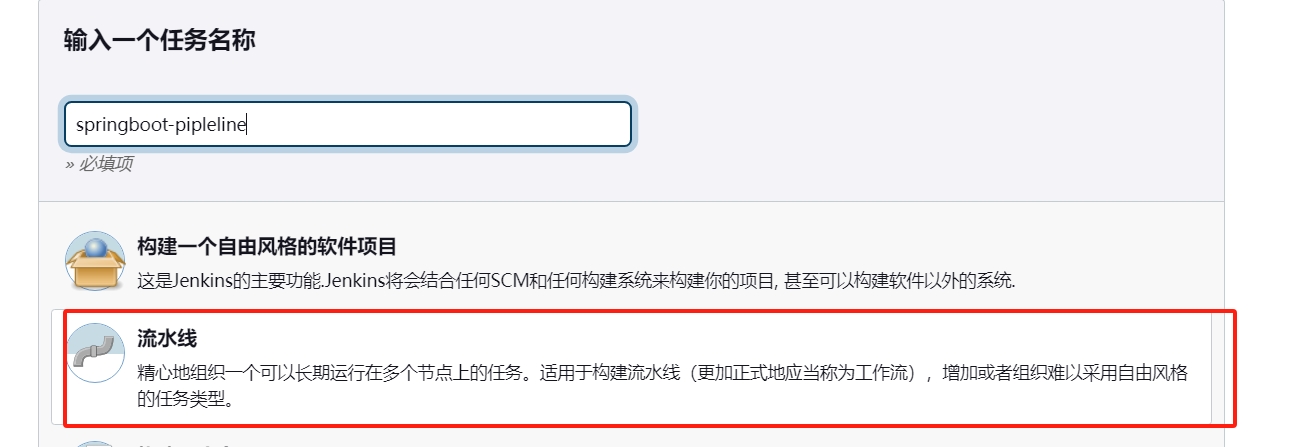

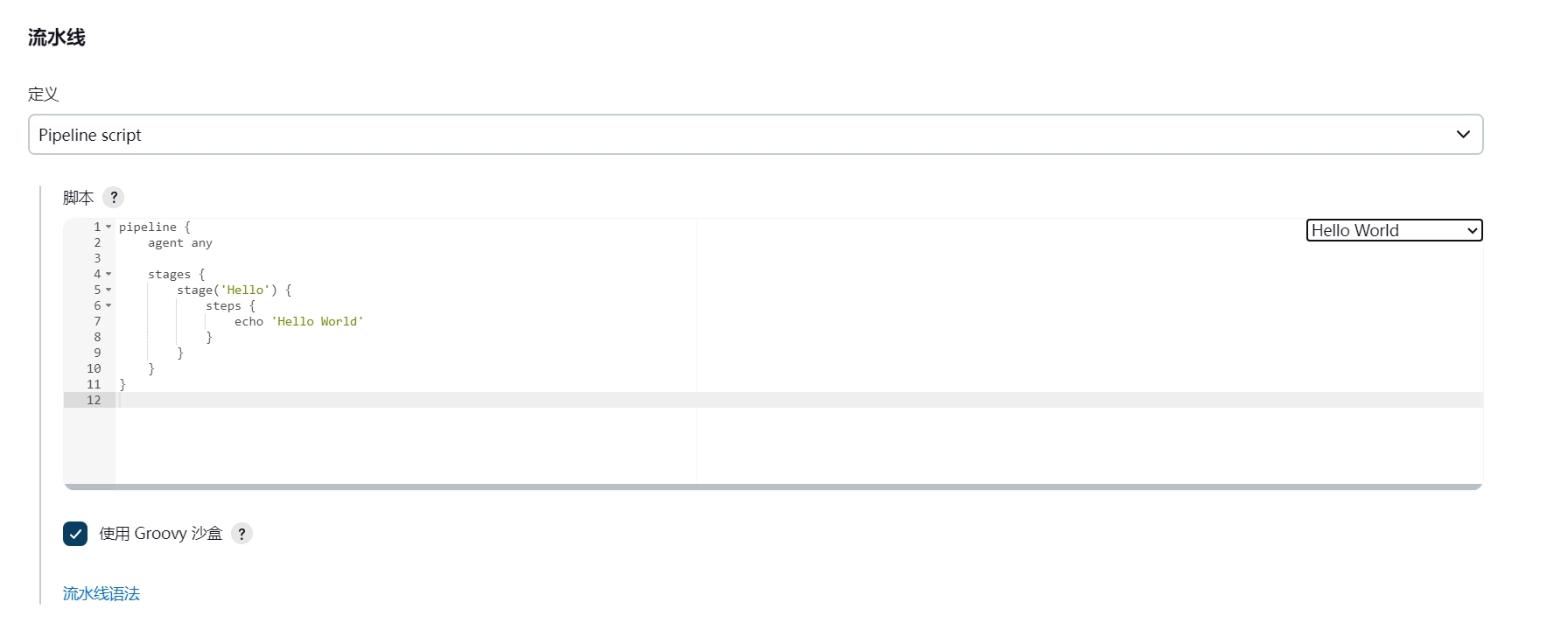

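Jenkins Pipeline Jobs

A Pipeline is scripted and runs step by step in stages

Configuration

Enable parameterized builds as before

Choose the Hello World sample script

Using the Pipeline Syntax link above, the snippet generator can produce the script for each stage; set the branch to $tag and generate the script

Note: the first time, the tag list may only contain master; version tags appear after one build has run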

Pipeline script

pipeline {

agent any

stages {

stage('Pull SourceCode') {

steps {

checkout([

$class: 'GitSCM',

branches: [[name: '$tag']],

extensions: [],

userRemoteConfigs: [[url: 'http://192.168.1.240/root/springbootdemo']]

])

}

}

stage('Maven Build') {

steps {

sh '/usr/local/maven/bin/mvn package'

}

}

stage('Publish Harbor Image') {

steps {

sshPublisher(publishers: [

sshPublisherDesc(

configName: 'Harbor-155',

transfers: [

sshTransfer(

cleanRemote: false,

excludes: '',

execCommand: '',

execTimeout: 120000,

flatten: false,

makeEmptyDirs: false,

noDefaultExcludes: false,

patternSeparator: '[, ]+',

remoteDirectory: '',

remoteDirectorySDF: false,

removePrefix: 'target',

sourceFiles: 'target/*.jar'

),

sshTransfer(

cleanRemote: false,

excludes: '',

execCommand: '''docker build -t 192.168.1.155:80/public/springbootdemo:$tag /usr/local/

docker login -u admin -p Harbor12345 192.168.1.155:80

docker push 192.168.1.155:80/public/springbootdemo:$tag''',

execTimeout: 120000,

flatten: false,

makeEmptyDirs: false,

noDefaultExcludes: false,

patternSeparator: '[, ]+',

remoteDirectory: '',

remoteDirectorySDF: false,

removePrefix: 'docker',

sourceFiles: 'docker/*'

)

],

usePromotionTimestamp: false,

useWorkspaceInPromotion: false,

verbose: false

)

])

}

}

stage('Run Container') {

steps {

sshPublisher(publishers: [

sshPublisherDesc(

configName: 'Target-176',

transfers: [

sshTransfer(

cleanRemote: false,

excludes: '',

execCommand: '''docker rm -f springbootdemo

docker run -d -p 80:8081 --name=springbootdemo 192.168.1.155:80/public/springbootdemo:$tag''',

execTimeout: 120000,

flatten: false,

makeEmptyDirs: false,

noDefaultExcludes: false,

patternSeparator: '[, ]+',

remoteDirectory: '',

remoteDirectorySDF: false,

removePrefix: '',

sourceFiles: ''

)

],

usePromotionTimestamp: false,

useWorkspaceInPromotion: false,

verbose: false

)

])

}

}

}

}

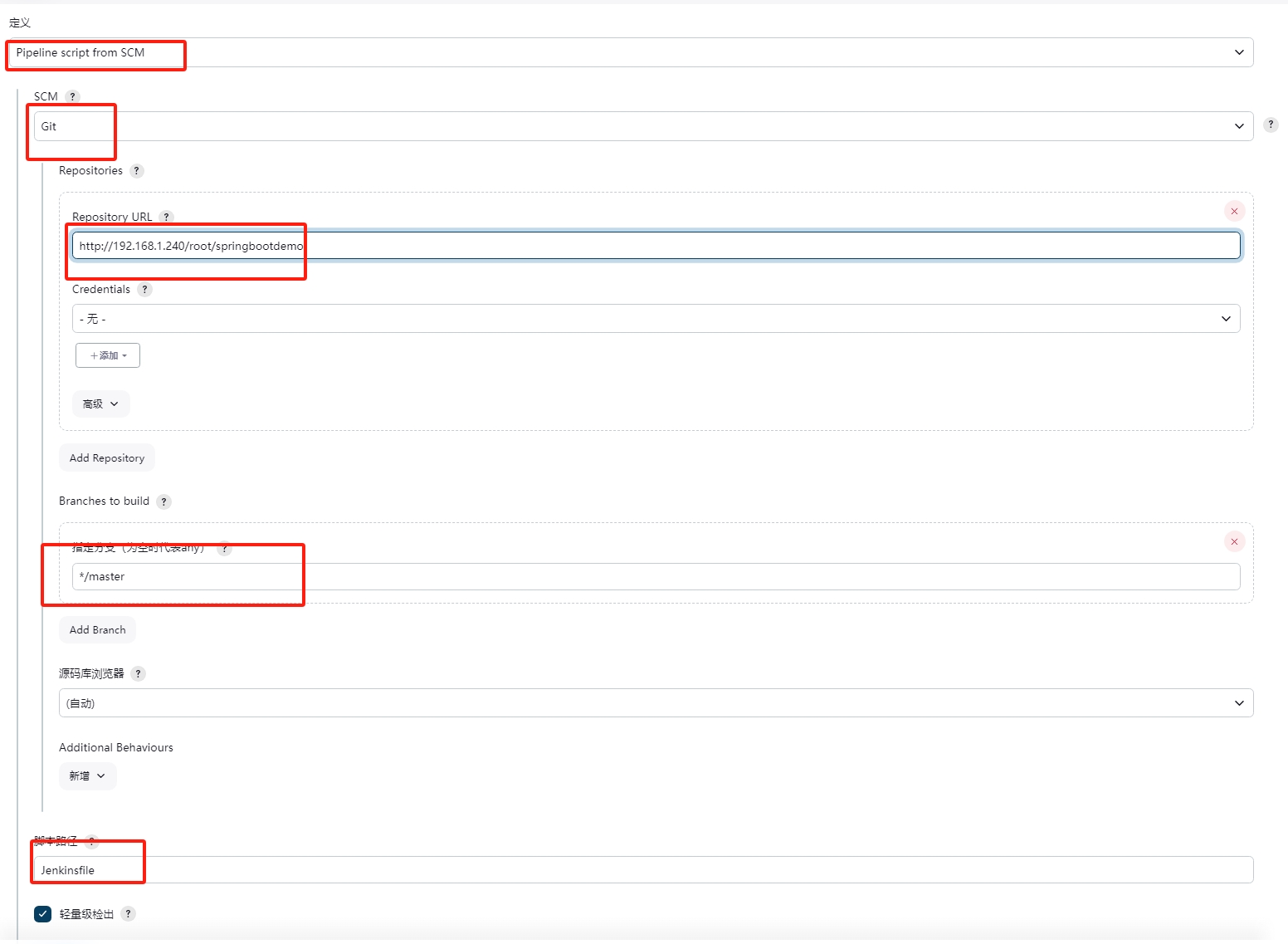

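Hosting the Jenkinsfile in GitLab

Create a file named Jenkinsfile in the project directory, copy the Pipeline script above into it, and push it to the master branch.

In the Pipeline section choose Pipeline script from SCM

Repository URL: http://192.168.1.240/root/springbootdemo

Branch: */master

Script path: Jenkinsfile

Jenkins Pipeline first downloads the Jenkinsfile from GitLab and then reads and runs the script. Building from SCM adds an extra Checkout SCM stage, which is that pull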

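Why Introduce Kubernetes

Kubernetes can deploy and manage thousands of components and supports scaling the infrastructure globally.

It is used to deploy and manage containerized applications. It relies on Linux container features to run heterogeneous applications without knowing their internals, and applications no longer have to be deployed to each machine by hand. Because the applications run in containers, they are isolated from other applications on the same server.

etcd is a highly available, distributed, secure key-value store that persists every resource object in the cluster, such as the state and metadata of Nodes, Services, and Pods, as well as configuration data.

The API Server (kube-apiserver) acts as the API gateway: it is the only entry point through which user requests and other system components interact with the cluster.

Controllers are the automated management and control center of the cluster. Some manage Pods (the Replication controller, the Deployment controller, and so on), some manage networking (the Endpoints controller, the Service controller, and so on), and some handle storage (the Attach/Detach controller, and so on).

The scheduler assigns Pods to cluster nodes. It raises resource utilization on each machine and spreads load across machines.

kubelet is the key daemon that runs on every node and is responsible for starting containers. At startup it loads its configuration and registers the node by creating a Node object in the API Server, with information such as the operating system, kernel version, IP address, total capacity (Capacity), and allocatable capacity (Allocatable Capacity).

kube-proxy is also a kind of "controller". It watches the API Server for changes to Service and Endpoint objects, sets up routing from Services to backend Pods according to the Endpoint information, maintains network rules, and forwards TCP, UDP, and SCTP traffic.

The container runtime is the component that actually runs and manages containers. Runtimes can be divided into high-level and low-level ones: high-level runtimes mainly include Docker, containerd, and CRI-O, and low-level runtimes include runc, Kata, and gVisor.

CNI (Container Network Interface) is the common container network interface, dedicated to setting up and tearing down container network connectivity. The container runtime invokes network plugins through CNI to configure container networking.

A Pod is the basic scheduling and execution unit for containerized applications and consists of one or more containers. Once the application starts, each container corresponds to a group of processes, and the processes in all containers of the same Pod share one network namespace and one network identity by default.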

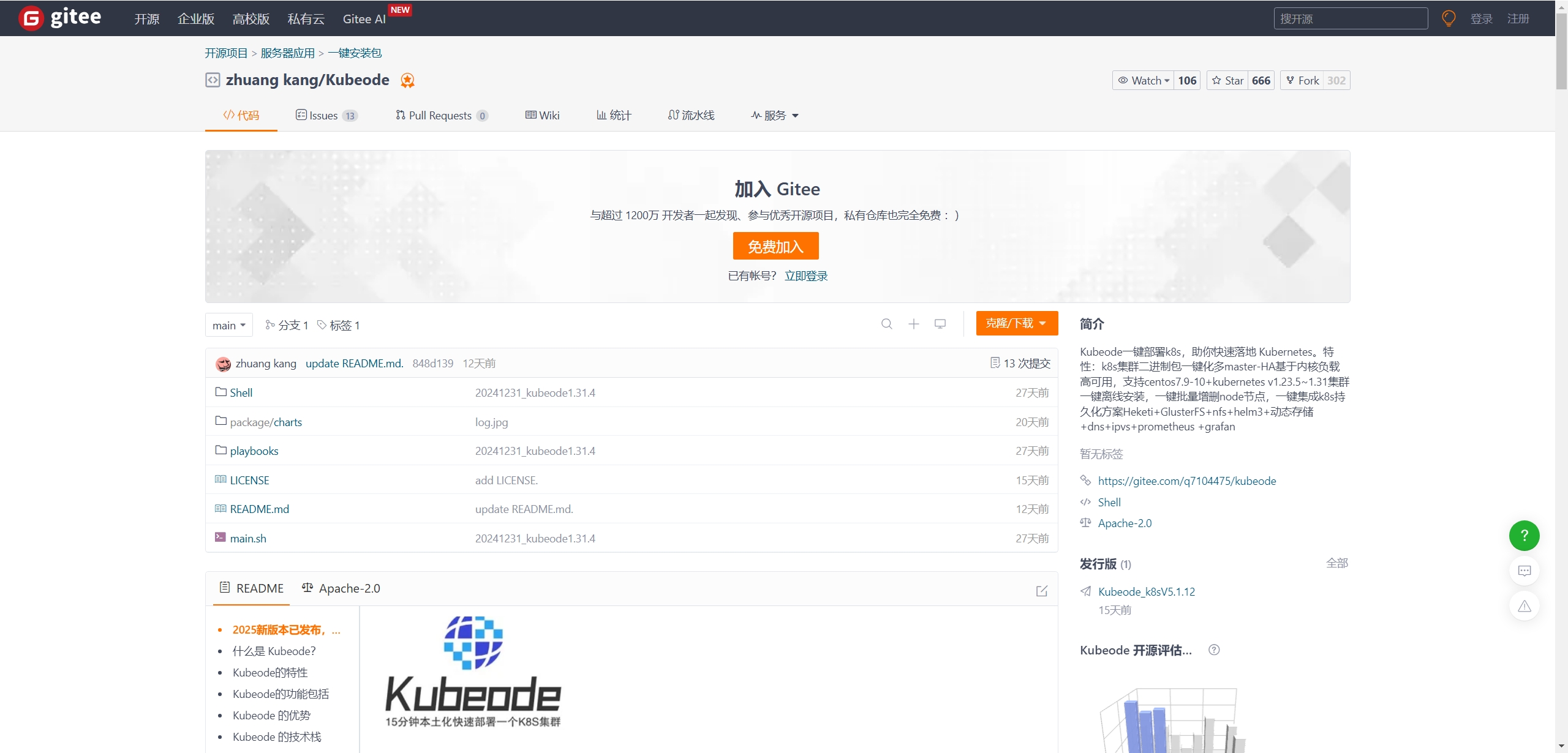

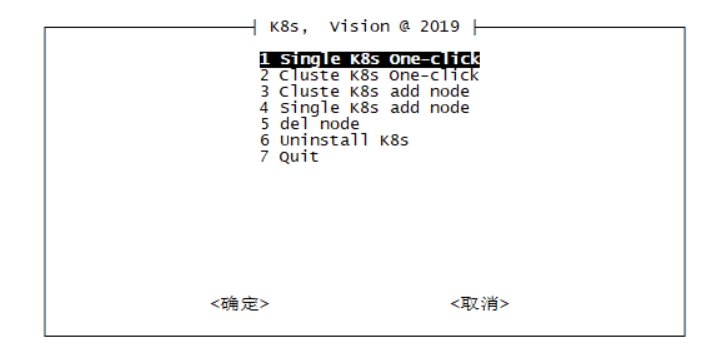

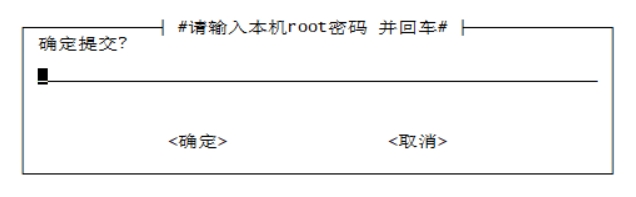

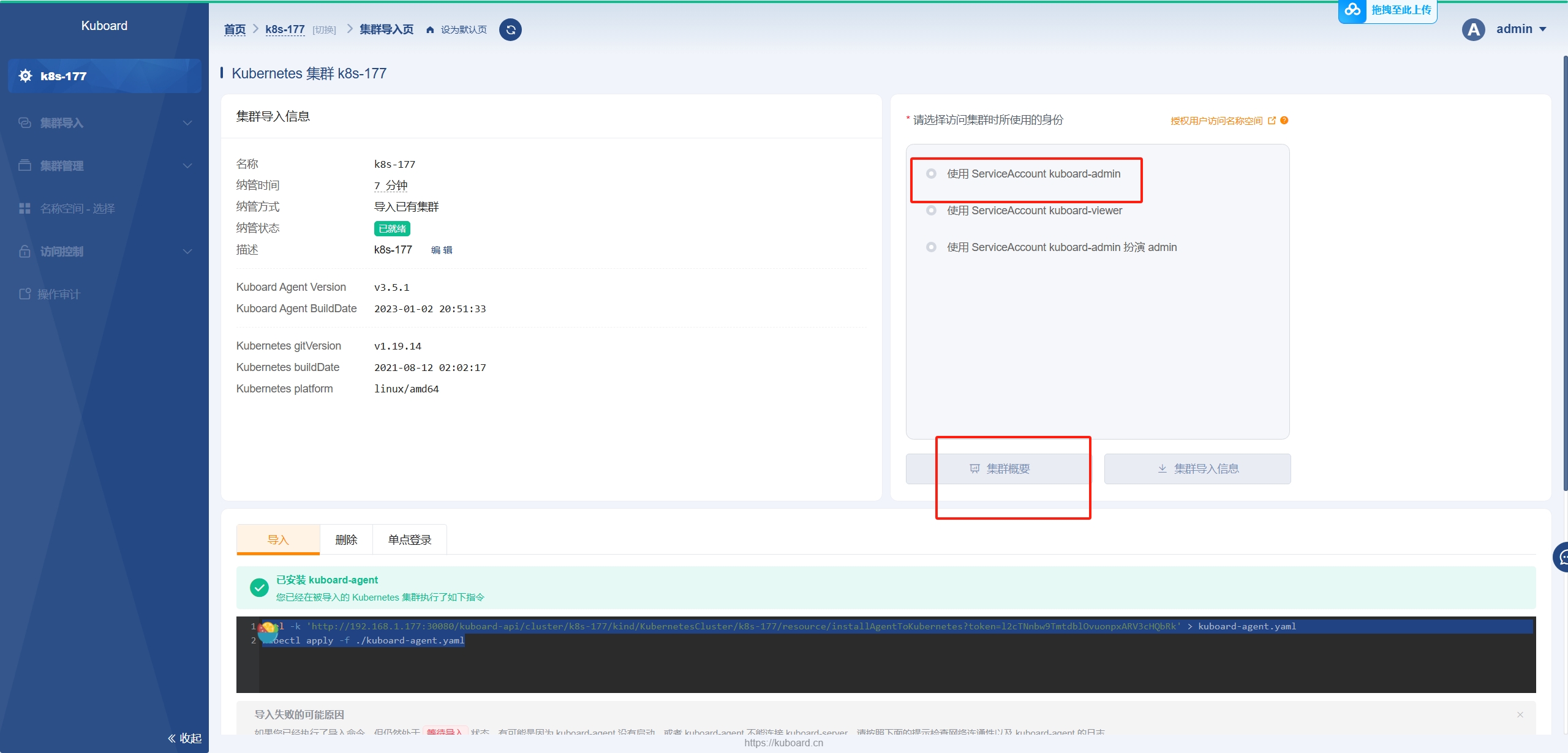

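Quickly Deploying Kubernetes on 177

Use Kubeode to deploy a single-node K8S quickly

Download the k8s-2022-04-24.tar release

Prepare the server: 4 CPU cores / 8 GB RAM / CentOS 7.x

Upload the archive to /usr/local, extract it, and run the installer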

tar xf k8s-2022-04-24.tar

cd k8s-2022-04-24

bash install.sh

Choose 1. Single-node deployment

Enter the root password of the 177 server: root

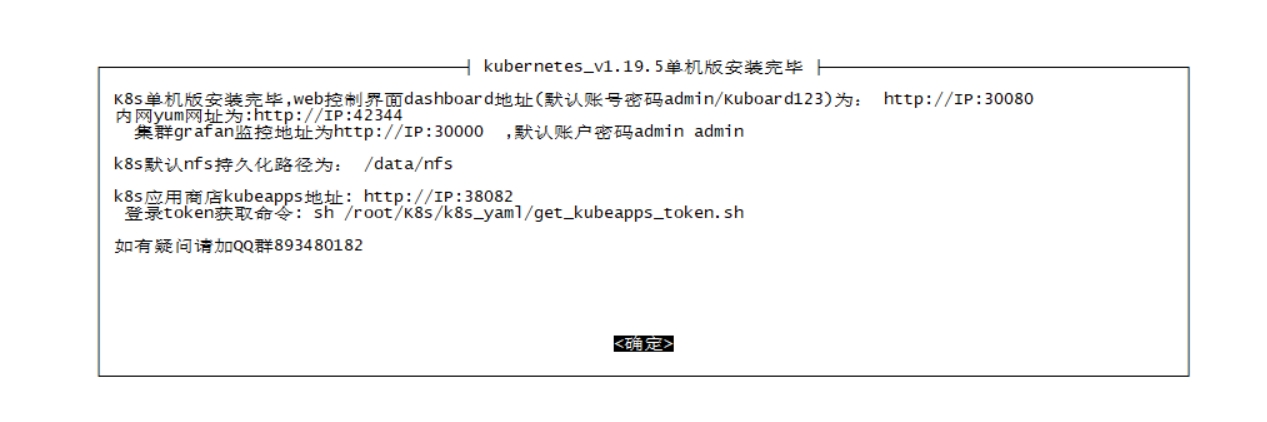

The installation succeeds and prints the generated usernames and passwords

Open http://192.168.1.177:30080

Username: admin

Password: Kuboard123

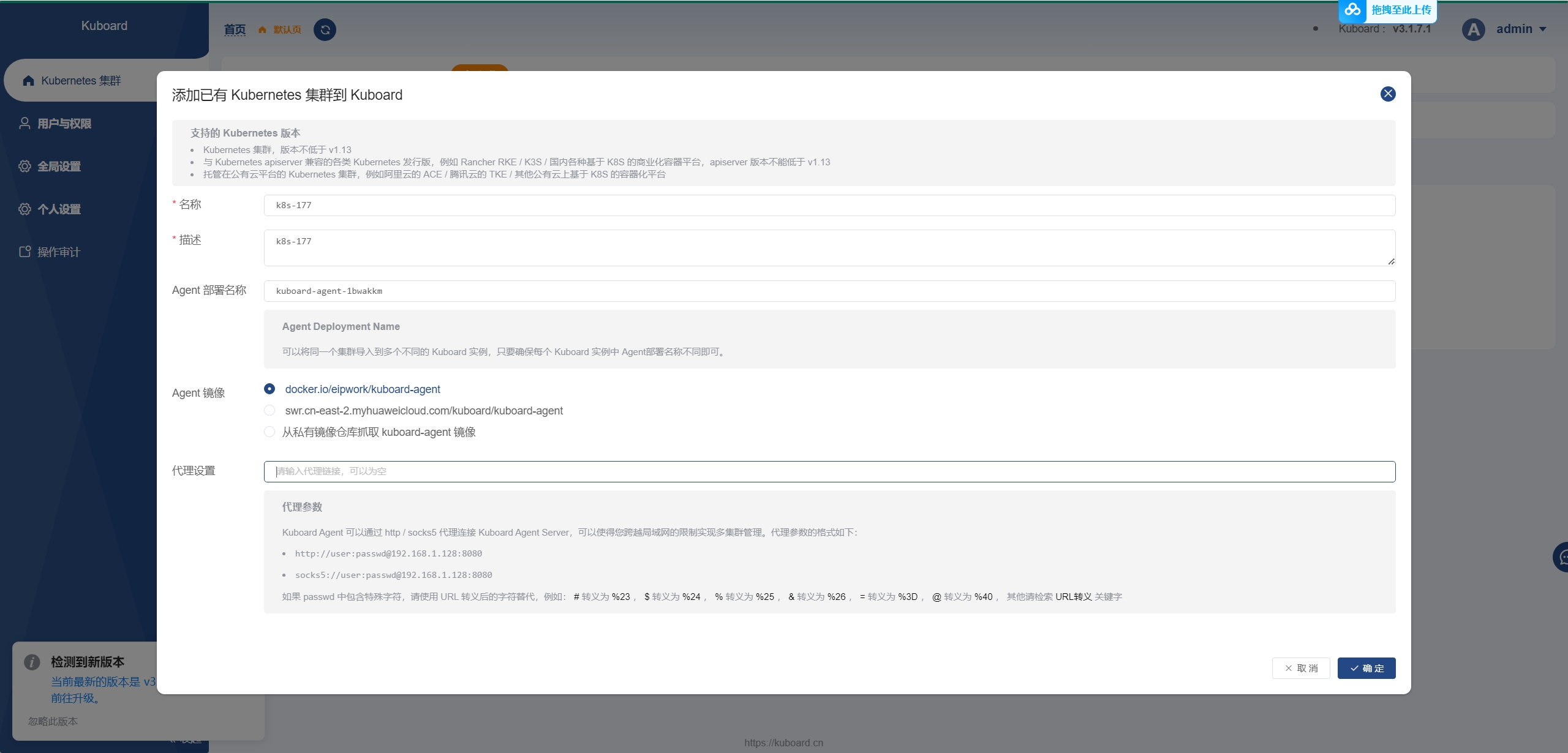

Add the cluster

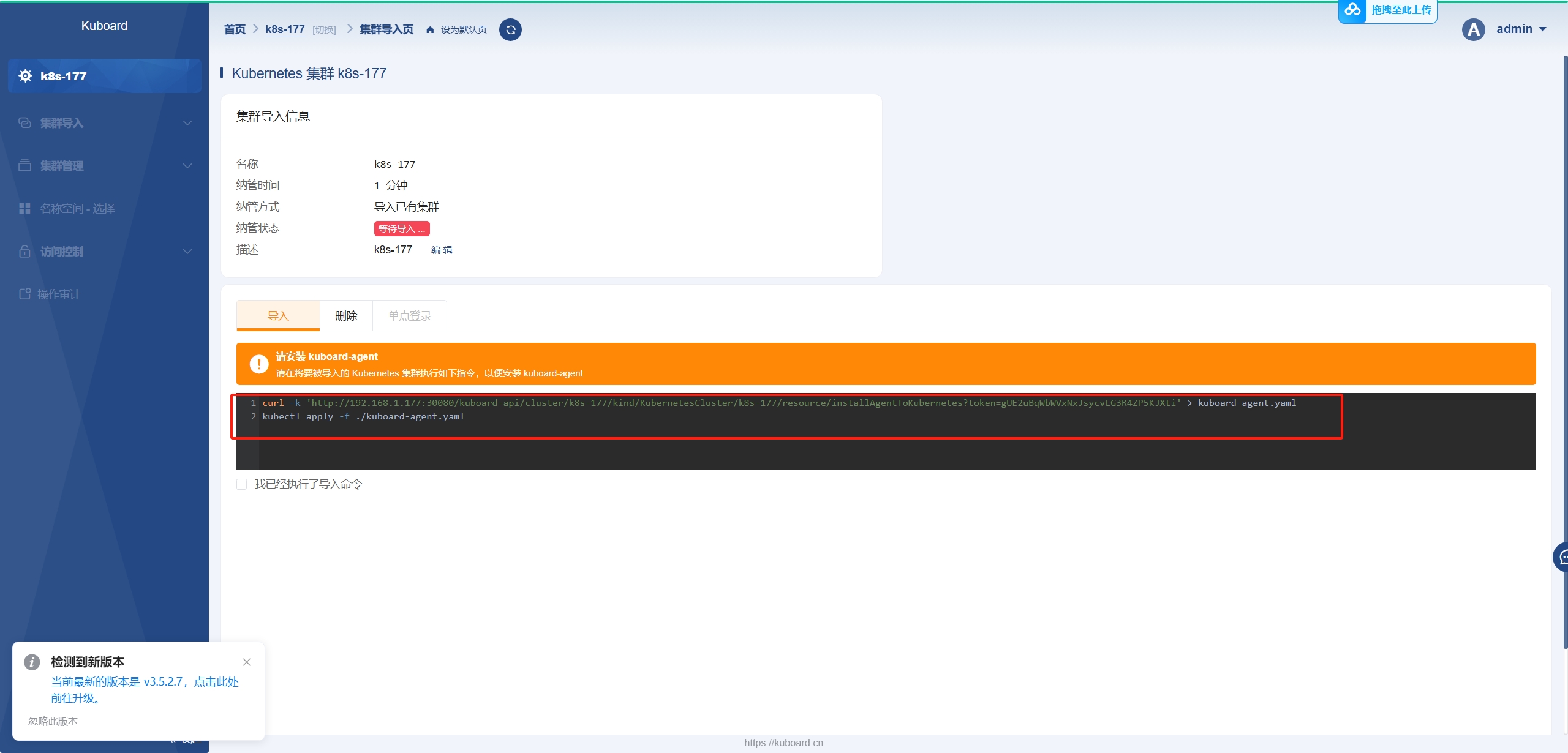

Copy the command and install the agent on 177

curl -k 'http://192.168.1.177:30080/kuboard-api/cluster/k8s-177/kind/KubernetesCluster/k8s-177/resource/installAgentToKubernetes?token=l2cTNnbw9TmtdblOvuonpxARV3cHQbRk' > kuboard-agent.yaml

kubectl apply -f ./kuboard-agent.yaml

# Check the agent

kubectl get pods -n kuboard -o wide -l "k8s.kuboard.cn/name in (kuboard-agent-1bwakkm, kuboard-agent-1bwakkm-2)"

# Check TCP connectivity

nc -vz 192.168.1.177 30081

# Check the logs

kubectl logs -f -n kuboard -l "k8s.kuboard.cn/name in (kuboard-agent-1bwakkm, kuboard-agent-1bwakkm-2)"

The cluster is imported; choose kuboard-admin to manage it

Open the Grafana monitoring dashboard

http://192.168.1.177:30000

Username: admin

Password: admin

Add the private registry to insecure-registries in Docker's daemon.json

vim /etc/docker/daemon.json

{

"registry-mirrors": ["https://dockerhub.azk8s.cn","https://hub-mirror.c.163.com"],

"insecure-registries": ["192.168.1.155:80"],

"exec-opts": ["native.cgroupdriver=cgroupfs"],

"log-driver": "json-file",

"log-opts": {"max-size": "10m","max-file":"10"}

}

systemctl daemon-reload

systemctl restart docker

# Check that the image can be pulled and run

docker run -d -p 80:8081 --name=springbootdemo 192.168.1.155:80/public/springbootdemo:1.0

# List the container

docker ps | grep springbootdemo

# Stop and remove it

docker stop springbootdemo

docker rm springbootdemo

Create the K8S Deployment manifest

The Deployment controller manages Pods indirectly by managing ReplicaSets

Using a manifest file

kubectl get deployment

kubectl delete deployment springbootdemo

mkdir -p /etc/k8s

cd /etc/k8s

cat > springbootdemo-deployment.yml <<-'EOF'

apiVersion: apps/v1
kind: Deployment
metadata:
  name: springbootdemo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: springbootdemo
  template:
    metadata:
      labels:
        app: springbootdemo
    spec:
      containers:
        - name: springbootdemo
          image: 192.168.1.155:80/public/springbootdemo:1.0.4
          ports:
            - containerPort: 8081

EOF

kubectl apply -f springbootdemo-deployment.yml

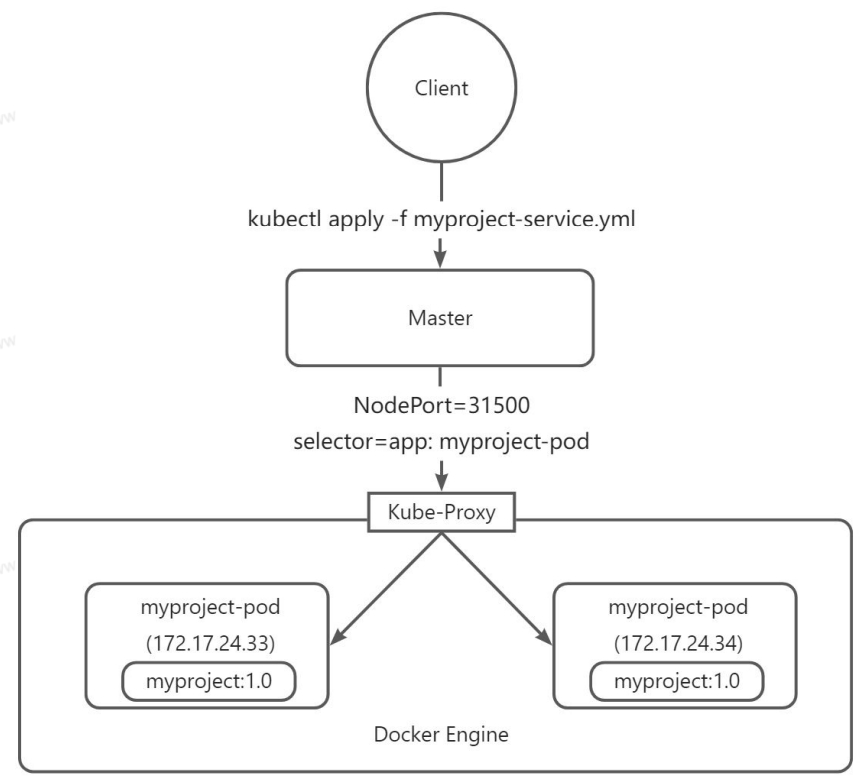

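Create the K8S Service manifest

Pod network plugins implement Pod IPs as private addresses, and Pod-to-Pod traffic stays inside a private network, so publishing a service that runs in a Pod requires a service publishing mechanism.

Create the Service manifest for the default namespace

Create it from the command line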

mkdir -p /etc/k8s

cd /etc/k8s

kubectl delete -f springbootdemo-service.yml

cat > springbootdemo-service.yml <<-'EOF'

apiVersion: v1
kind: Service
metadata:
  name: springbootdemo-service
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 8081
      nodePort: 31005
      name: springbootdemo-port
  selector:
    app: springbootdemo

EOF

kubectl apply -f springbootdemo-service.yml

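# This cluster was not created with minikube, so check the Service with kubectl and open http://192.168.1.177:31005

kubectl get svc springbootdemo-service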
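The nodePort used above (31005) has to fall inside the cluster's NodePort range, which is 30000-32767 unless the API server was started with a different range. A minimal pre-check in shell, assuming that default range; the function name is_valid_nodeport is hypothetical.

```shell
#!/bin/sh
# Succeed only if $1 is an integer within the default NodePort range.
is_valid_nodeport() {
  case $1 in
    ''|*[!0-9]*) return 1 ;;   # empty or not purely digits
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

for port in 31005 80; do
  if is_valid_nodeport "$port"; then
    echo "$port: inside the default NodePort range"
  else
    echo "$port: outside 30000-32767"
  fi
done
```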

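Continuous Deployment to K8S Driven by the Jenkins Pipeline

Add deployment.yml to the project root (next to src), combining the earlier Deployment and Service manifests into one file

Replace the hard-coded version number with the placeholder <TAG>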

apiVersion: apps/v1
kind: Deployment
metadata:
  name: springbootdemo-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: springbootdemo-pod
  template:
    metadata:
      labels:
        app: springbootdemo-pod
    spec:
      containers:
        - name: springbootdemo
          image: 192.168.1.155:80/public/springbootdemo:<TAG>
          ports:
            - containerPort: 8081
---
apiVersion: v1
kind: Service
metadata:
  name: springbootdemo-service
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 8081
      nodePort: 31005
      name: springbootdemo-port
  selector:
    app: springbootdemo-pod

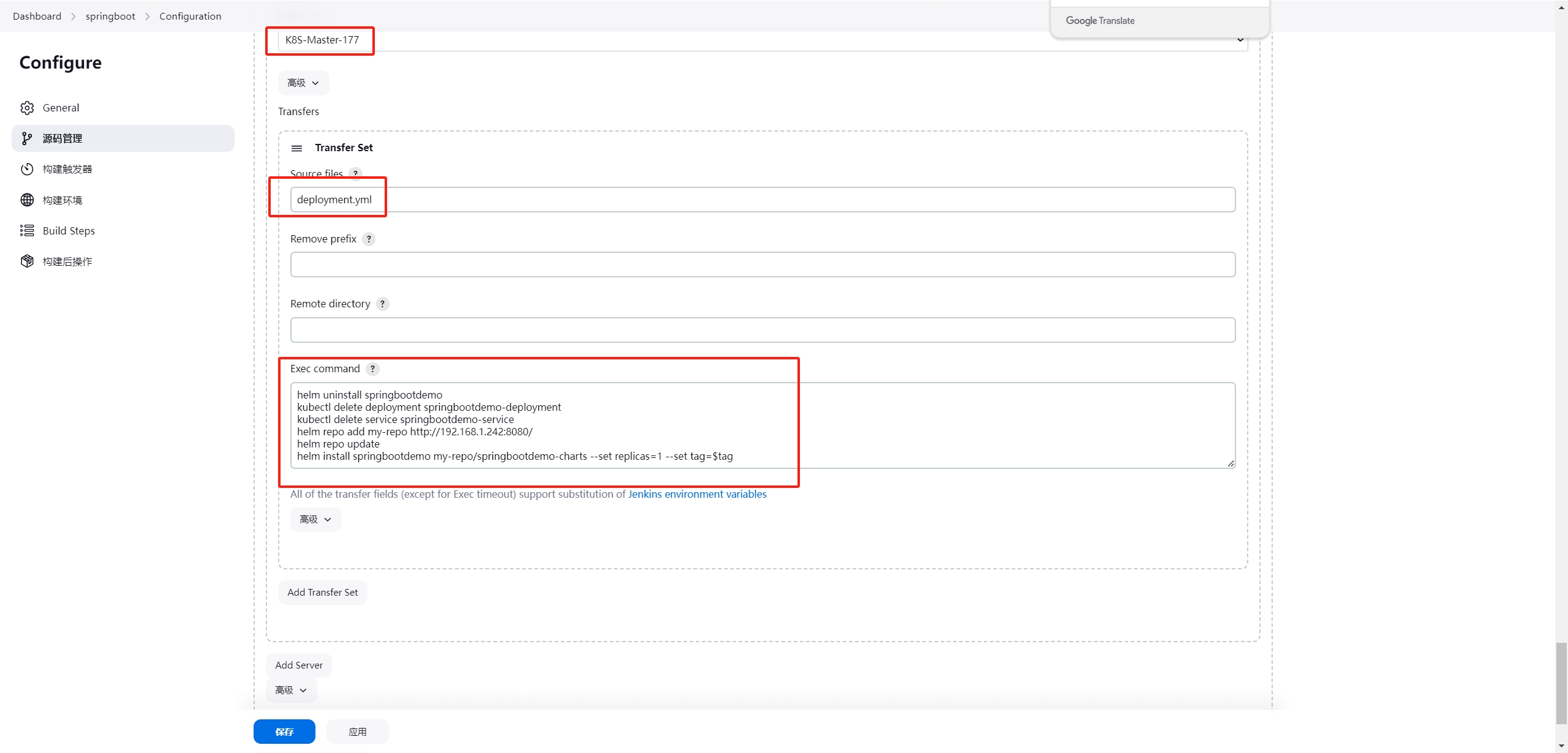

Back in Jenkins (192.168.1.241:8080), add the 177 server under Configure System (same procedure as before)

Name:K8S-Master-177

Hostname:192.168.1.177

Username:root

Password:root

Remote Directory:/usr/local

Back in the job configuration, add an SSH Server entry that publishes deployment.yml to 177

Select K8S-Master-177

Source files:deployment.yml

Exec command:

sed -i 's/<TAG>/$tag/' /usr/local/deployment.yml

kubectl delete deployment springbootdemo-deployment

kubectl delete service springbootdemo-service

kubectl apply -f /usr/local/deployment.yml

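What these commands do:

Replace the <TAG> placeholder in deployment.yml with the selected version

Delete the previous Deployment

Delete the previous Service

Apply the new deployment.yml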
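The placeholder substitution can be tried on its own before wiring it into Jenkins. The sketch below is a variant of the sed step that reads the template on stdin and writes the rendered manifest to stdout instead of editing the file in place; the helper name render_manifest is hypothetical, and it assumes the tag contains no "/" or "&" characters.

```shell
#!/bin/sh
# Replace every <TAG> placeholder read from stdin with the version in $1.
render_manifest() {
  sed "s/<TAG>/$1/g"
}

printf 'image: 192.168.1.155:80/public/springbootdemo:<TAG>\n' | render_manifest 1.0.4

# On the K8S master the rendered output could be piped straight to kubectl:
#   render_manifest "$tag" < /usr/local/deployment.yml | kubectl apply -f -
```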

Complete Pipeline Script

pipeline {

agent any

stages {

stage('Pull SourceCode') {

steps {

checkout([

$class: 'GitSCM',

branches: [[name: '$tag']],

extensions: [],

userRemoteConfigs: [[url: 'http://192.168.1.240/root/springbootdemo']]

])

}

}

stage('Maven Build') {

steps {

sh '/usr/local/maven/bin/mvn package'

}

}

stage('Publish Harbor Image') {

steps {

sshPublisher(publishers: [

sshPublisherDesc(

configName: 'Harbor-155',

transfers: [

sshTransfer(

cleanRemote: false,

excludes: '',

execCommand: '',

execTimeout: 120000,

flatten: false,

makeEmptyDirs: false,

noDefaultExcludes: false,

patternSeparator: '[, ]+',

remoteDirectory: '',

remoteDirectorySDF: false,

removePrefix: 'target',

sourceFiles: 'target/*.jar'

),

sshTransfer(

cleanRemote: false,

excludes: '',

execCommand: '''docker build -t 192.168.1.155:80/public/springbootdemo:$tag /usr/local/

docker login -u admin -p Harbor12345 192.168.1.155:80

docker push 192.168.1.155:80/public/springbootdemo:$tag''',

execTimeout: 120000,

flatten: false,

makeEmptyDirs: false,

noDefaultExcludes: false,

patternSeparator: '[, ]+',

remoteDirectory: '',

remoteDirectorySDF: false,

removePrefix: 'docker',

sourceFiles: 'docker/*'

)

],

usePromotionTimestamp: false,

useWorkspaceInPromotion: false,

verbose: false

)

])

}

}

stage('Run Container') {

steps {

sshPublisher(publishers: [

sshPublisherDesc(

configName: 'K8S-Master-177',

transfers: [

sshTransfer(

cleanRemote: false,

excludes: '',

execCommand: '''sed -i \'s/<TAG>/$tag/\' /usr/local/deployment.yml

kubectl delete deployment springbootdemo-deployment

kubectl delete service springbootdemo-service

kubectl apply -f /usr/local/deployment.yml''',

execTimeout: 120000,

flatten: false,

makeEmptyDirs: false,

noDefaultExcludes: false,

patternSeparator: '[, ]+',

remoteDirectory: '',

remoteDirectorySDF: false,

removePrefix: '',

sourceFiles: 'deployment.yml'

)

],

usePromotionTimestamp: false,

useWorkspaceInPromotion: false,

verbose: false

)

])

}

}

}

}

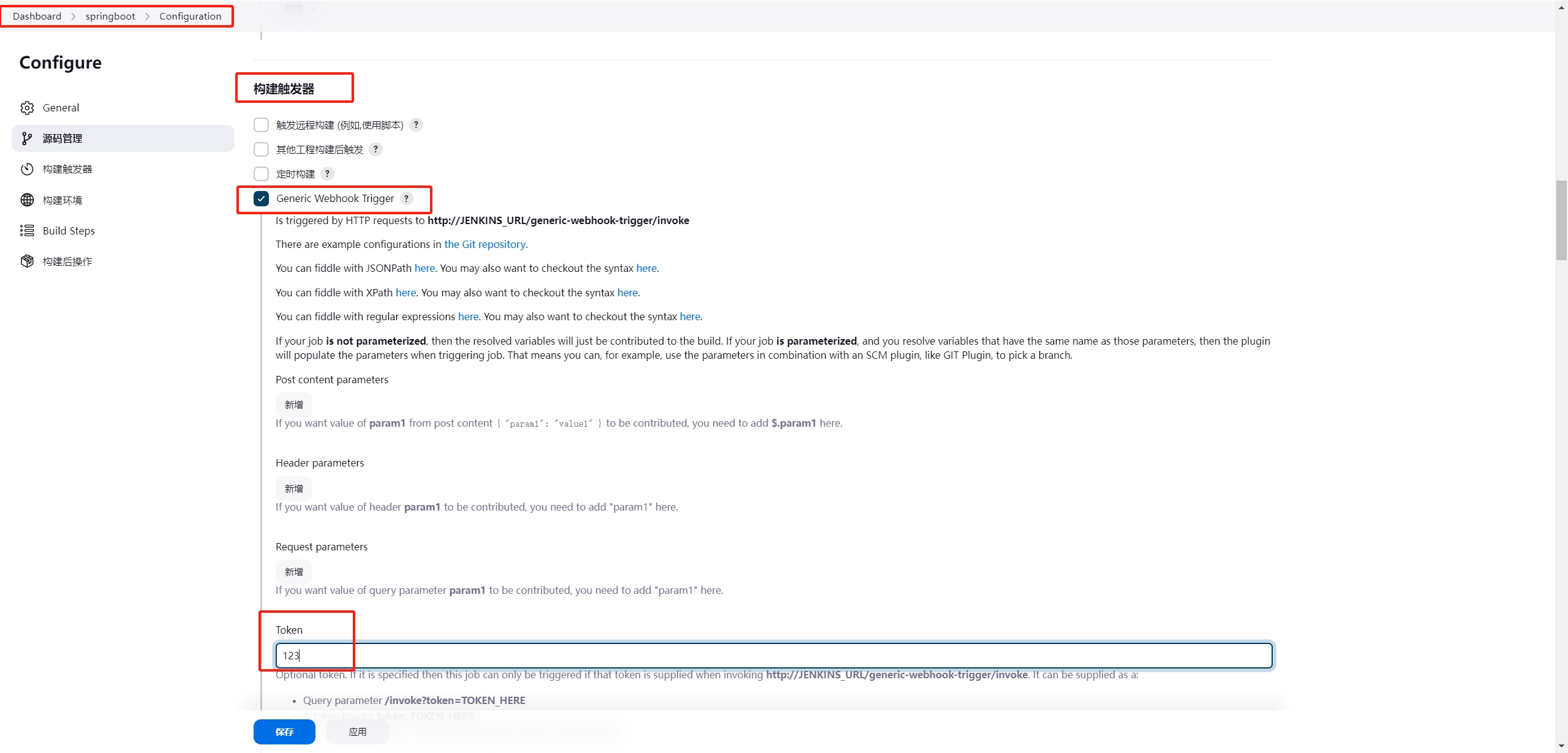

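Automatic Releases with GitLab Webhooks

Trigger a build automatically when a new tag is published in GitLab

Install the Generic Webhook Trigger plugin in Jenkins (192.168.1.241:8080)

A Generic Webhook Trigger option then appears under Build Triggers; ticking it provides a URL that GitLab can call

Input: Token: 123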

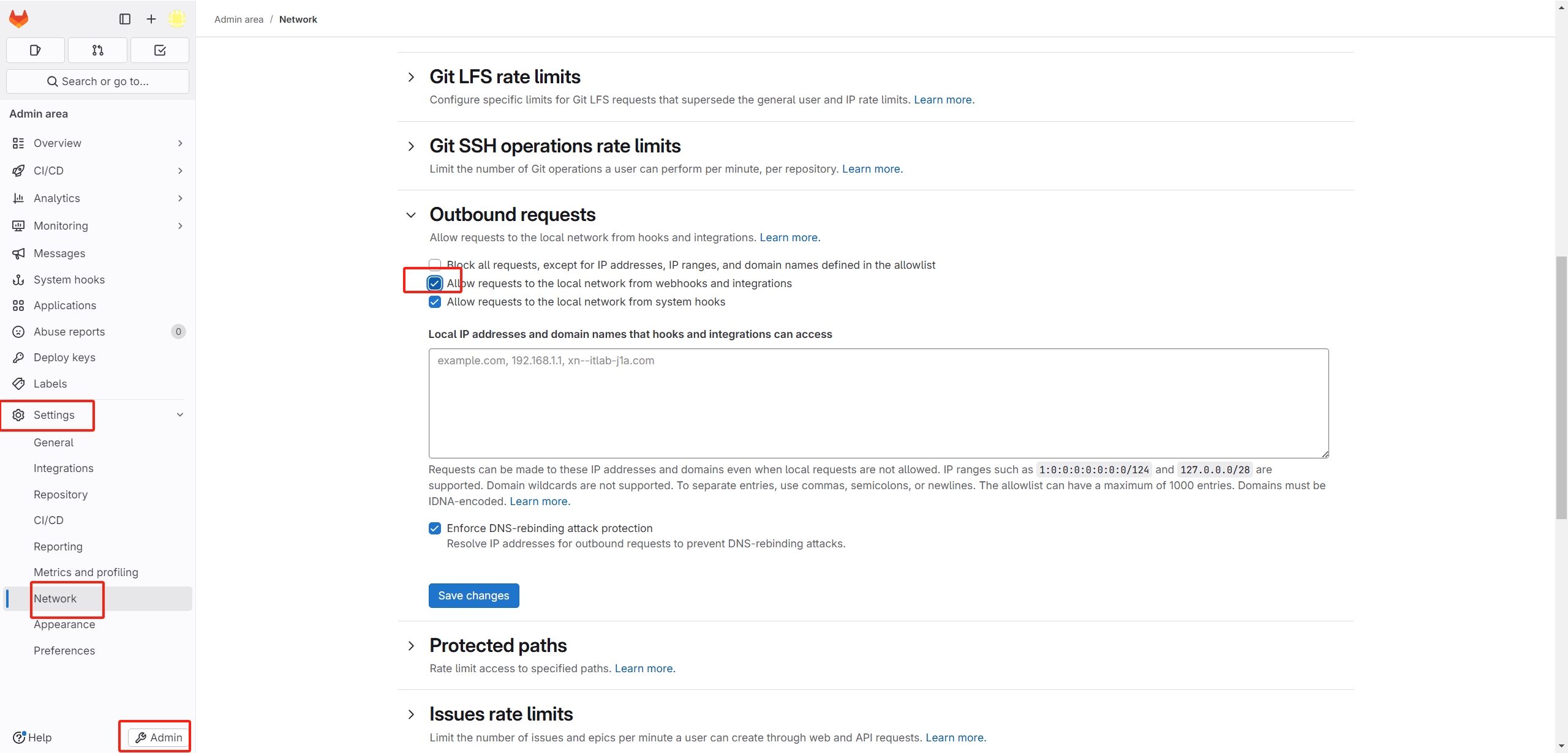

Back in GitLab (192.168.1.240), go to Menu -> Admin -> Settings -> Network -> Outbound requests and tick Allow requests to the local network from web hooks and services, so that webhooks may call addresses on the local network

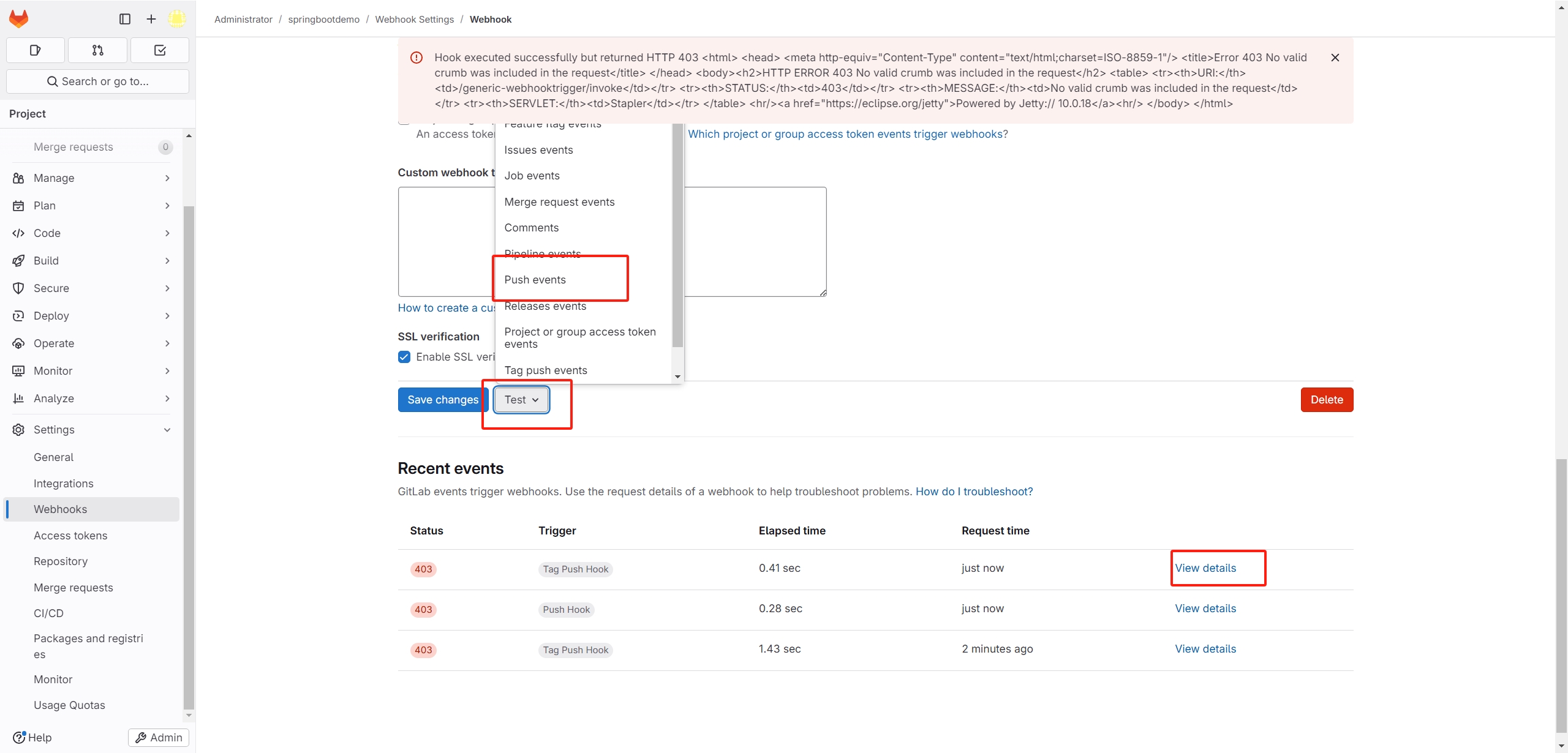

Enable the webhook: select springbootdemo -> Settings -> Webhooks and enter:

URL:http://192.168.1.241:8080/generic-webhook-trigger/invoke

Secret token:123

Trigger: Tag push events

Under Project Hooks below, click Test and fire Tag push events to test the setup

At this point the build fails because no tag variable is passed

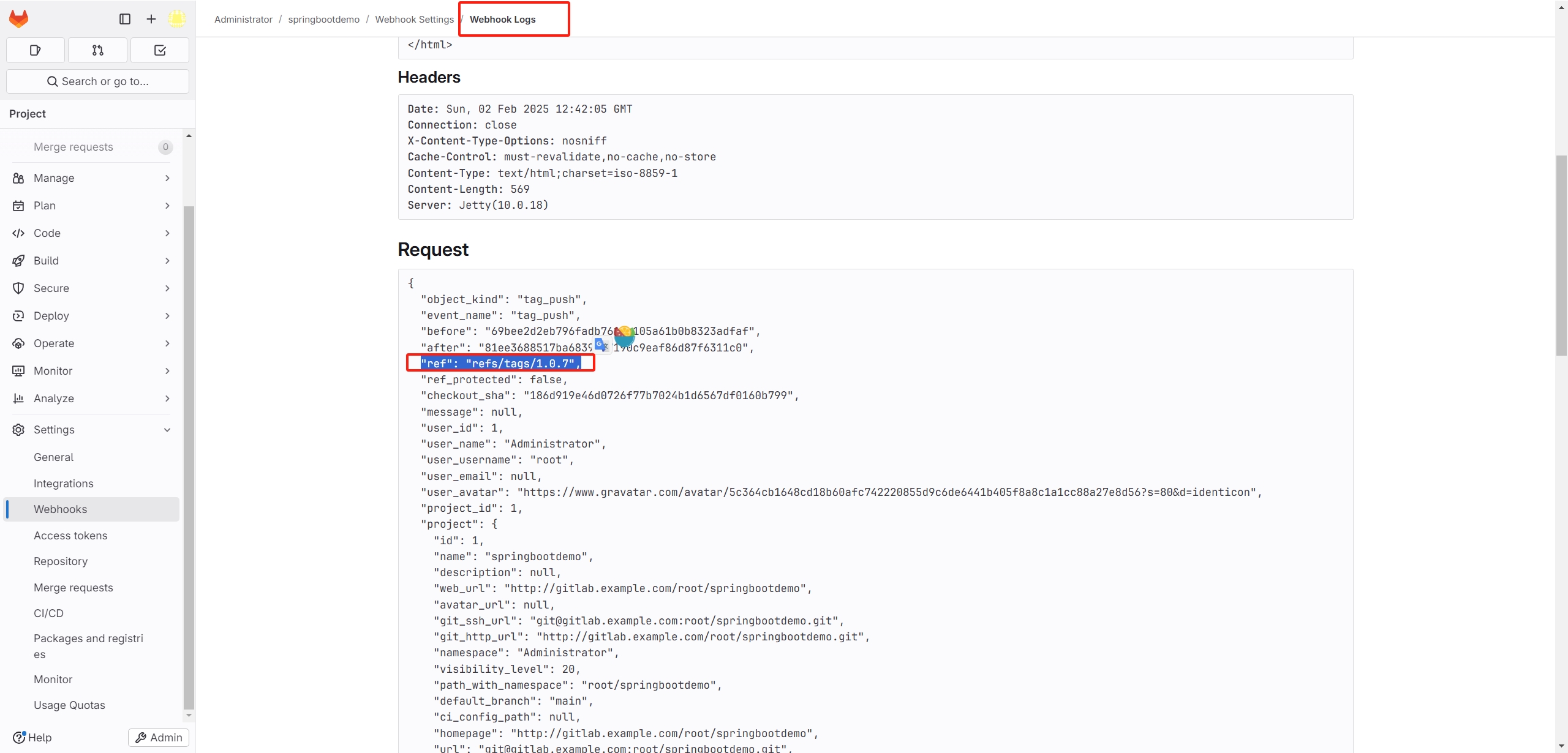

In GitLab Project Hooks, click Edit to see the details of the webhook requests that were sent

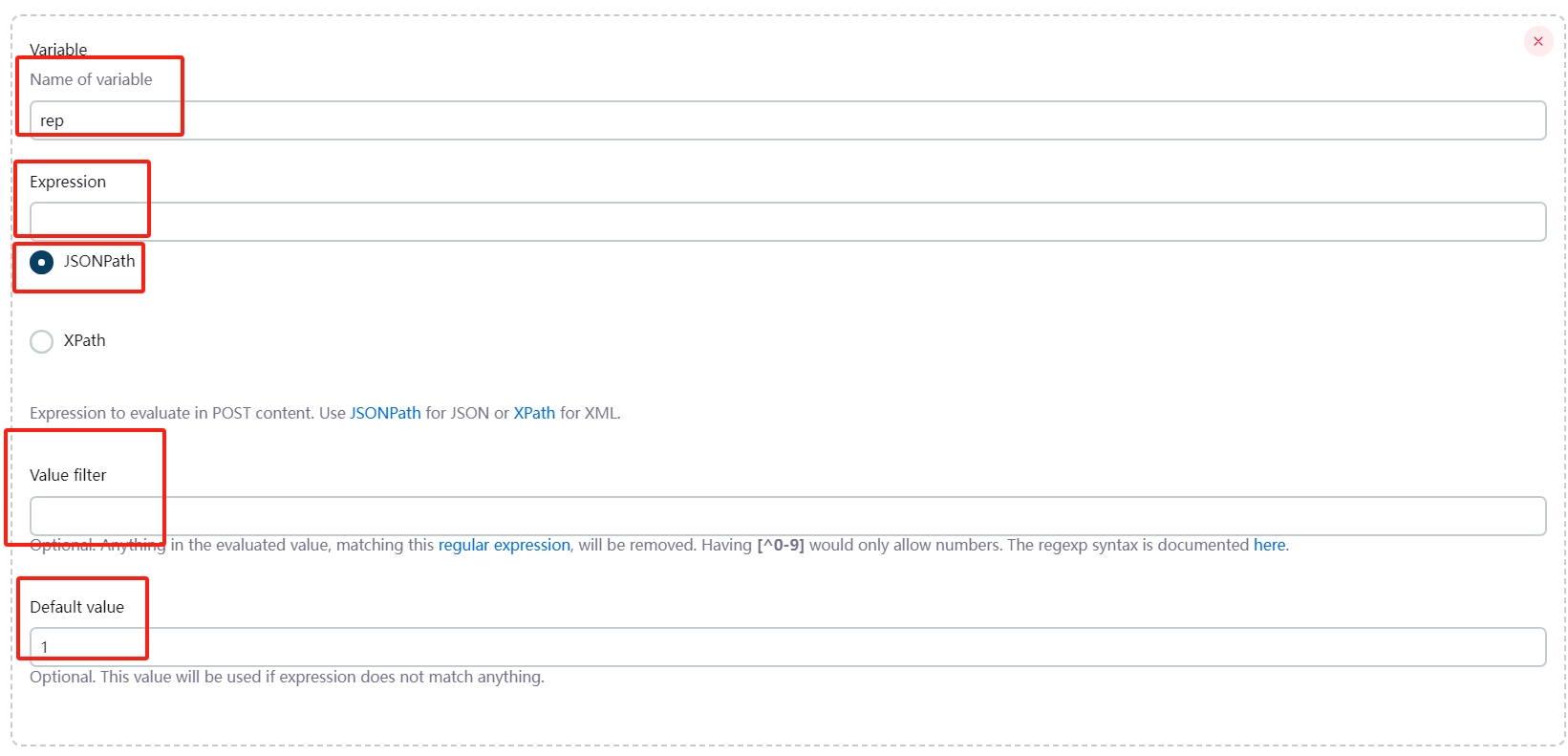

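The ref field in the webhook request body contains the published tag; extract it and assign it to the Jenkins tag variable

Define the tag variable from ref in the request body, dropping the refs/tags/ prefix so that only the version (for example 1.0.7) remains; if ref is absent, tag defaults to 1.0.0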

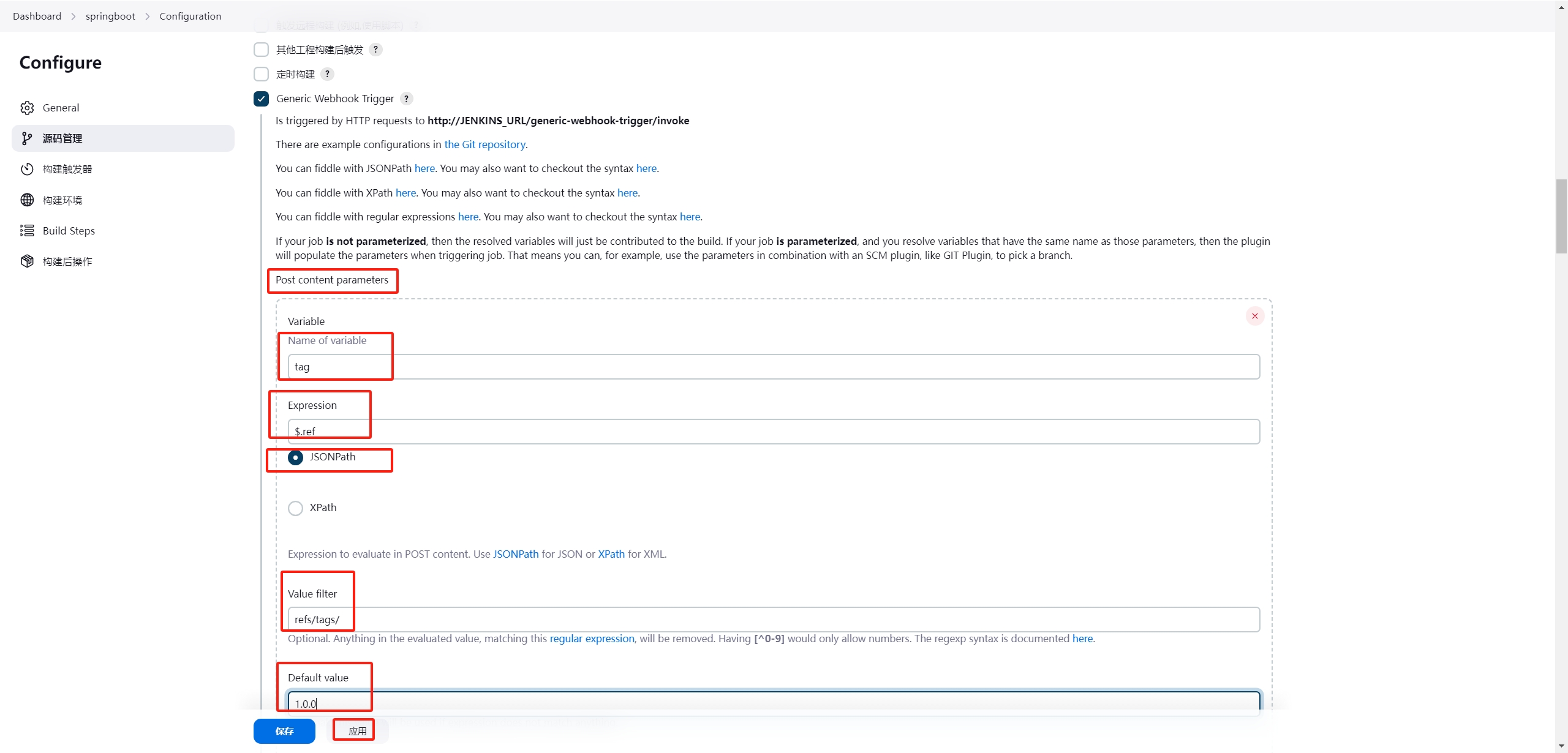

在Jenkins Webhook⾯板选择Post Content Parameters

Variable:tag

Expression:$.ref

Type:JSONPath

Value filter:refs/tags/

Default value:1.0.0

After a new tag is created, the build for that version succeeds and the webhook call returns 200
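What the Value filter and Default value settings do to the ref field can be mimicked in plain shell. This is only an illustration of the transformation, not how the plugin is implemented; the function name tag_from_ref is hypothetical.

```shell
#!/bin/sh
# Strip the "refs/tags/" prefix from a webhook ref; fall back to 1.0.0
# when the ref is empty, like the Default value configured above.
tag_from_ref() {
  ref=$1
  tag=${ref#refs/tags/}
  printf '%s\n' "${tag:-1.0.0}"
}

tag_from_ref "refs/tags/1.0.7"
tag_from_ref ""
```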

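Helm: the Kubernetes Application Package Manager

For a complex application or middleware system, containerized deployment on K8S usually means studying the Docker image's runtime requirements and environment variables, configuring the storage, network, and other resources the containers depend on, designing and writing YAML files for Deployment, ConfigMap, Service, Volume, Ingress, and so on, and then submitting them to Kubernetes one by one. In short, microservice architectures and containerization make deploying and managing complex applications considerably harder.

Helm is used to define, install, and upgrade complex applications deployed on Kubernetes.

Helm packages Kubernetes resources such as Deployment, Service, ConfigMap, and Ingress into a Chart, which is saved to a chart repository that stores, distributes, and shares it. Helm supports versioning of application charts and simplifies defining, packaging, deploying, upgrading, deleting, and rolling back Kubernetes applications. By packaging Kubernetes resources, much as apt-get or yum does on Linux, Helm handles the installation and deployment of complex software and supports version management of deployed releases.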

yum install -y wget

mkdir -p /usr/local/helm

cd /usr/local/helm

wget --no-check-certificate https://manongbiji.oss-cn-beijing.aliyuncs.com/ittailkshow/devops/download/helm-v3.10.0-linux-amd64.tar.gz

tar zxvf helm-v3.10.0-linux-amd64.tar.gz

mv linux-amd64/helm /usr/local/bin/helm

# Install nginx: https://artifacthub.io/packages/helm/bitnami/nginx

helm uninstall my-nginx

helm repo add bitnami https://charts.bitnami.com/bitnami

helm install my-nginx bitnami/nginx --version 13.2.10 --set replicaCount=2

helm list

helm status my-nginx

kubectl get deploy

kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 10h

my-nginx LoadBalancer 10.110.29.244 <pending> 80:30633/TCP 96s

# Open http://192.168.1.177:30633

helm Templates

Helm Values

Update the configuration; --set overrides a value

helm upgrade my-nginx bitnami/nginx --version 13.2.10 --set replicaCount=3

Building a Private Helm Repository and Publishing Custom Charts

https://github.com/helm/chartmuseum

Create ChartMuseum on the 184 server

mkdir -p /usr/local/charts

docker run --rm -itd \

-e DEBUG=1 \

-e STORAGE=local \

-e STORAGE_LOCAL_ROOTDIR=/charts \

-v /usr/local/charts:/charts \

--name chartmuseum \

--network macvlan31 --ip=192.168.1.242 \

ghcr.io/helm/chartmuseum:v0.14.0

# Grant permissions; otherwise uploading charts fails with a permission error

chmod -R 777 /usr/local/charts

Install Helm on the 177 K8S master

yum install -y wget

mkdir -p /usr/local/helm

cd /usr/local/helm

wget --no-check-certificate https://manongbiji.oss-cn-beijing.aliyuncs.com/ittailkshow/devops/download/helm-v3.10.0-linux-amd64.tar.gz

tar zxvf helm-v3.10.0-linux-amd64.tar.gz

mv -f linux-amd64/helm /usr/local/bin/helm

# Create springbootdemo-charts and replace the default chart version and app version with 1.0.0 and stable

mkdir -p /usr/local/charts

cd /usr/local/charts

rm -rf springbootdemo-charts

helm create springbootdemo-charts

cd /usr/local/charts/springbootdemo-charts

sed -i 's/version: 0.1.0/version: 1.0.0/' /usr/local/charts/springbootdemo-charts/Chart.yaml

sed -i 's/appVersion: \"1.16.0\"/appVersion: stable/' /usr/local/charts/springbootdemo-charts/Chart.yaml

cd /usr/local/charts/springbootdemo-charts/templates

# Remove everything under templates and create the Deployment and Service YAML files

rm -rf *

cat > deployment.yaml <<-'EOF'

apiVersion: apps/v1
kind: Deployment
metadata:
  name: springbootdemo-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: springbootdemo-pod
  template:
    metadata:
      labels:
        app: springbootdemo-pod
    spec:
      containers:
        - name: springbootdemo
          image: 192.168.1.155:80/public/springbootdemo:1.0.9
          ports:
            - containerPort: 8081

EOF

cat > service.yaml <<-'EOF'

apiVersion: v1
kind: Service
metadata:
  name: springbootdemo-service
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 8081
      nodePort: 31005
      name: springbootdemo-port
  selector:
    app: springbootdemo-pod

EOF

# Run helm package and upload the archive to the ChartMuseum repository on 242

cd /usr/local/charts/springbootdemo-charts

helm package .

curl -X DELETE http://192.168.1.242:8080/api/charts/springbootdemo-charts/1.0.0

curl --data-binary "@springbootdemo-charts-1.0.0.tgz" http://192.168.1.242:8080/api/charts

# After the upload succeeds, install springbootdemo-charts with helm install to publish the application

helm uninstall springbootdemo

kubectl delete deployment springbootdemo-deployment

kubectl delete service springbootdemo-service

helm repo add my-repo http://192.168.1.242:8080/

helm repo update

helm search repo my-repo

helm install springbootdemo my-repo/springbootdemo-charts

helm list

Dynamic Version Releases with Chart Templates

Parameterize the chart with values.yaml

# Rewrite values.yaml with the required options

cat > /usr/local/charts/springbootdemo-charts/values.yaml <<-'EOF'

replicas: 3

tag: 1.0.9

nodePort: 31005

EOF

# In the Deployment template, load the options with {{.Values.xxx}}

cat > /usr/local/charts/springbootdemo-charts/templates/deployment.yaml <<-'EOF'

apiVersion: apps/v1
kind: Deployment
metadata:
  name: springbootdemo-deployment
spec:
  replicas: {{.Values.replicas}}
  selector:
    matchLabels:
      app: springbootdemo-pod
  template:
    metadata:
      labels:
        app: springbootdemo-pod
    spec:
      containers:
        - name: springbootdemo
          image: 192.168.1.155:80/public/springbootdemo:{{.Values.tag}}
          ports:
            - containerPort: 8081

EOF

# In the Service template, load the options with {{.Values.xxx}}

cat > /usr/local/charts/springbootdemo-charts/templates/service.yaml <<-'EOF'

apiVersion: v1
kind: Service
metadata:
  name: springbootdemo-service
spec:
  type: NodePort
  ports:
    - port: 80
      targetPort: 8081
      nodePort: {{.Values.nodePort}}
      name: springbootdemo-port
  selector:
    app: springbootdemo-pod

EOF

# Delete the old chart version, repackage, and republish

curl -X DELETE http://192.168.1.242:8080/api/charts/springbootdemo-charts/1.0.0

cd /usr/local/charts/springbootdemo-charts/

helm package .

curl --data-binary "@springbootdemo-charts-1.0.0.tgz" http://192.168.1.242:8080/api/charts

# On the 177 server, adjust replicas and tag to change the release dynamically. Remember to run helm repo update to refresh the cache

helm uninstall springbootdemo

kubectl delete deployment springbootdemo-deployment

kubectl delete service springbootdemo-service

helm repo add my-repo http://192.168.1.242:8080/

helm repo update

helm install springbootdemo my-repo/springbootdemo-charts --set replicas=1 --set tag=1.0.9
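The uninstall-then-install sequence above removes the running release before the new one exists. Helm's upgrade command has an --install flag that installs the release when it is missing and upgrades it otherwise, which may be a gentler alternative. The sketch below only assembles and prints such a command line so it can be reviewed before use; the function name helm_release_cmd is hypothetical, and the release, chart, and value names are taken from the notes.

```shell
#!/bin/sh
# Print a "helm upgrade --install" command line for the given release.
# $1: release name, $2: chart reference, $3: replicas, $4: image tag
helm_release_cmd() {
  printf 'helm upgrade --install %s %s --set replicas=%s --set tag=%s\n' \
    "$1" "$2" "$3" "$4"
}

helm_release_cmd springbootdemo my-repo/springbootdemo-charts 1 1.0.9
```

Whether an in-place upgrade is acceptable depends on the resources left over from the earlier kubectl-managed deployment, which would still need to be deleted once.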

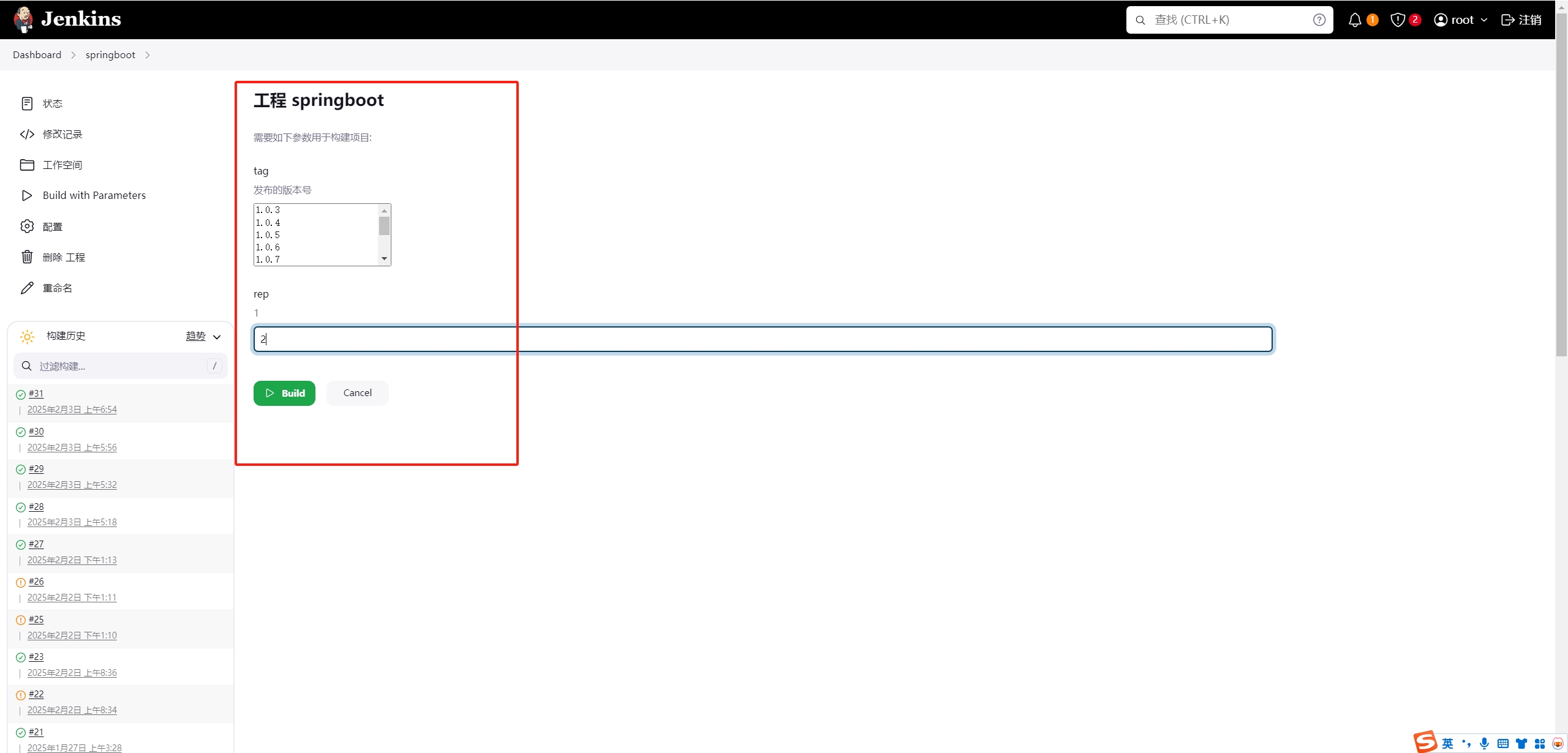

Dynamic Releases from Jenkins with Helm

Use Helm together with the Jenkins pipeline for dynamic releases

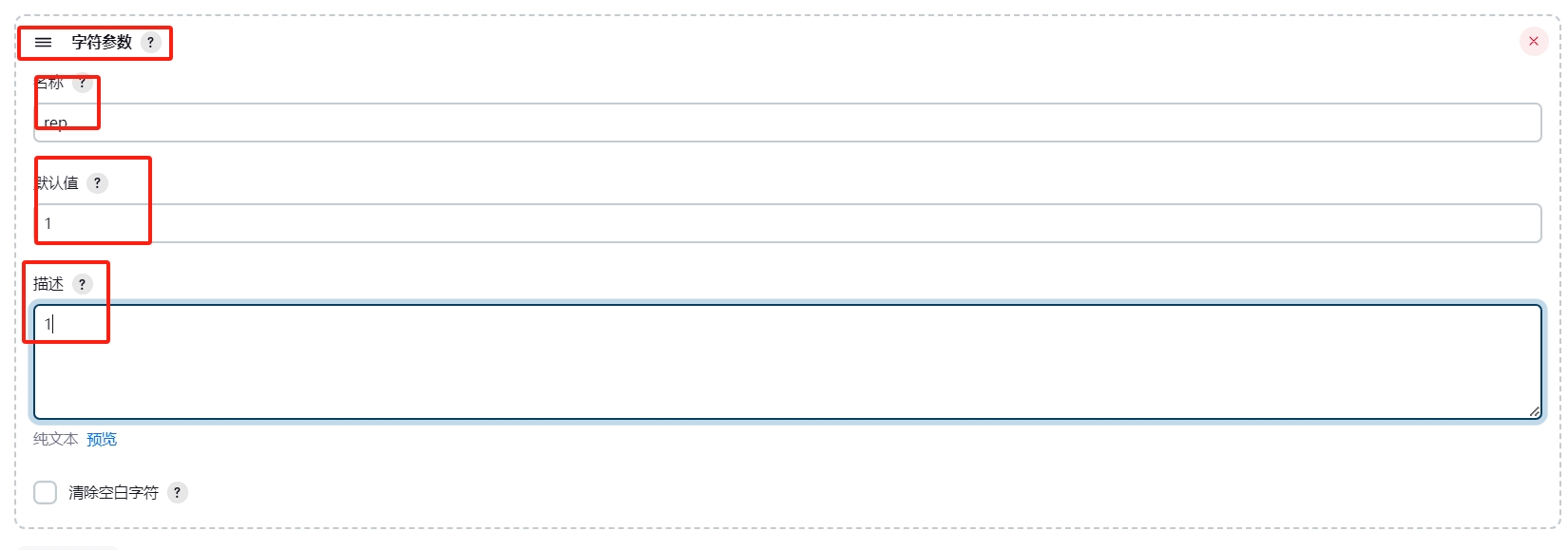

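Two variables, $replicas and $tag, are configured as build parameters in Jenkins, next to the Git parameter. Add a string parameter named replicas with default value 1

Name: replicas

Default value: 1

Description: number of replicas to release

Also add replicas in the webhook configuration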

Variable:replicas

Expression:(nil)

Type:JSONPath

Default value:1

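A manual build now shows two input fields

The parameters used by a given build can also be viewed afterwards

Change the last Jenkins stage to helm install and pass the replicas parameter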

helm uninstall springbootdemo

kubectl delete deployment springbootdemo-deployment

kubectl delete service springbootdemo-service

helm repo add my-repo http://192.168.1.242:8080/

helm repo update

helm install springbootdemo my-repo/springbootdemo-charts --set replicas=$replicas --set tag=$tag

Change the last stage of the Jenkins Pipeline script to helm install and pass the replicas parameter

Complete script

pipeline {

agent any

stages {

stage('Pull SourceCode') {

steps {

checkout([

$class: 'GitSCM',

branches: [[name: '$tag']],

extensions: [],

userRemoteConfigs: [[url: 'http://192.168.1.240/root/springbootdemo']]

])

}

}

stage('Maven Build') {

steps {

sh '/usr/local/maven/bin/mvn package'

}

}

stage('Publish Harbor Image') {

steps {

sshPublisher(publishers: [

sshPublisherDesc(

configName: 'Harbor-155',

transfers: [

sshTransfer(

cleanRemote: false,

excludes: '',

execCommand: '',

execTimeout: 120000,

flatten: false,

makeEmptyDirs: false,

noDefaultExcludes: false,

patternSeparator: '[, ]+',

remoteDirectory: '',

remoteDirectorySDF: false,

removePrefix: 'target',

sourceFiles: 'target/*.jar'

),

sshTransfer(

cleanRemote: false,

excludes: '',

execCommand: '''docker build -t 192.168.1.155:80/public/springbootdemo:$tag /usr/local/

docker login -u admin -p Harbor12345 192.168.1.155:80

docker push 192.168.1.155:80/public/springbootdemo:$tag''',

execTimeout: 120000,

flatten: false,

makeEmptyDirs: false,

noDefaultExcludes: false,

patternSeparator: '[, ]+',

remoteDirectory: '',

remoteDirectorySDF: false,

removePrefix: 'docker',

sourceFiles: 'docker/*'

)

],

usePromotionTimestamp: false,

useWorkspaceInPromotion: false,

verbose: false

)

])

}

}

stage('Run Container') {

steps {

sshPublisher(publishers: [

sshPublisherDesc(

configName: 'K8S-Master-177',

transfers: [

sshTransfer(

cleanRemote: false,

excludes: '',

execCommand: '''helm uninstall springbootdemo

kubectl delete deployment springbootdemo-deployment

kubectl delete service springbootdemo-service

helm repo add my-repo http://192.168.1.242:8080/

helm repo update

helm install springbootdemo my-repo/springbootdemo-charts --set replicas=$rep --set tag=$tag''',

execTimeout: 120000,

flatten: false,

makeEmptyDirs: false,

noDefaultExcludes: false,

patternSeparator: '[, ]+',

remoteDirectory: '',

remoteDirectorySDF: false,

removePrefix: '',

sourceFiles: 'deployment.yml'

)

],

usePromotionTimestamp: false,

useWorkspaceInPromotion: false,

verbose: false

)

])

}

}

}

}

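Supplementary corrections to the Helm pipeline release

Packaging and uploading the Helm chart should be handled by Jenkins, not by the K8S Master.

Helm therefore has to be installed inside the Jenkins container so that Jenkins can package and upload the chart.

Copy the previously generated charts/springbootdemo-charts directory into the project source and push it to GitLab.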

cd /usr/bin/

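# Download the prepared Helm binary

wget --no-check-certificate https://manongbiji.oss-cn-beijing.aliyuncs.com/ittailkshow/devops/download/helm

# Grant execute permission, then copy the binary into the Jenkins container; Helm is usable there right away

chmod 777 /usr/bin/helm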

docker cp /usr/bin/helm jenkins:/usr/bin/helm
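The copy step gives no feedback on whether Helm is actually usable inside the container. The sketch below wraps the same steps in a function and adds a check with `helm version --short`. The function name `install_helm_in_container` and the `DOCKER` override variable are hypothetical, and mode 755 is used here as an assumption that execute permission is all that is needed.

```shell
#!/usr/bin/env sh
# Sketch: install a downloaded helm binary into a running Jenkins container.
# DOCKER is a hypothetical override (e.g. DOCKER="echo docker") for previewing the commands.
DOCKER="${DOCKER:-docker}"

install_helm_in_container() {
  local bin="$1" container="$2"
  # Fail early if the download did not produce a file.
  if [ ! -f "$bin" ]; then
    echo "helm binary not found: $bin" >&2
    return 1
  fi
  # Mode 755 makes the binary executable for every user.
  chmod 755 "$bin" || return 1
  $DOCKER cp "$bin" "$container:/usr/bin/helm" || return 1
  # Confirm that the copied binary runs inside the container.
  $DOCKER exec "$container" helm version --short
}
```

With the names used above, the call would be `install_helm_in_container /usr/bin/helm jenkins`.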

Adjust the pipeline script by adding the following stage after the Maven Build stage. It deletes the previously uploaded chart version, runs helm package, and uploads the new archive to the private chart repository.

stage('Helm Build&Upload') {
    steps {
        sh 'curl -X DELETE http://192.168.1.242:8080/api/charts/springbootdemo-charts/1.0.0'
        sh 'helm package ./charts/springbootdemo-charts'
        sh 'curl --data-binary \"@springbootdemo-charts-1.0.0.tgz\" http://192.168.1.242:8080/api/charts'
    }
}
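The stage hard-codes version 1.0.0 in both the DELETE URL and the uploaded file name, so it stops matching as soon as the chart version changes. As a sketch, the name and version can be read from Chart.yaml instead, since `helm package` names its output `<name>-<version>.tgz`. The helper names `chart_field` and `chart_archive_name` are hypothetical, and the parsing assumes `name:` and `version:` appear as unindented top-level keys.

```shell
#!/usr/bin/env sh
# Sketch: derive the packaged chart file name from Chart.yaml.

chart_field() {
  # Print the value of a top-level key (e.g. name, version) from a Chart.yaml.
  local key="$1" file="$2"
  sed -n "s/^${key}:[[:space:]]*//p" "$file" | head -n 1 | tr -d "\"'"
}

chart_archive_name() {
  # Build the file name that `helm package` writes for this chart directory.
  local chart_dir="$1" name version
  name="$(chart_field name "$chart_dir/Chart.yaml")"
  version="$(chart_field version "$chart_dir/Chart.yaml")"
  echo "${name}-${version}.tgz"
}
```

Inside the stage, the upload could then use `curl --data-binary "@$(chart_archive_name ./charts/springbootdemo-charts)" http://192.168.1.242:8080/api/charts`.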

Complete script

pipeline {
    agent any
    stages {
        stage('Pull SourceCode') {
            steps {
                checkout([
                    $class: 'GitSCM',
                    branches: [[name: '$tag']],
                    extensions: [],
                    userRemoteConfigs: [[url: 'http://192.168.1.240/root/springbootdemo']]
                ])
            }
        }
        stage('Maven Build') {
            steps {
                sh '/usr/local/maven/bin/mvn package'
            }
        }
        stage('Helm Build&Upload') {
            steps {
                sh 'curl -X DELETE http://192.168.1.242:8080/api/charts/springbootdemo-charts/1.0.0'
                sh 'helm package ./charts/springbootdemo-charts'
                sh 'curl --data-binary \"@springbootdemo-charts-1.0.0.tgz\" http://192.168.1.242:8080/api/charts'
            }
        }
        stage('Publish Harbor Image') {
            steps {
                sshPublisher(publishers: [
                    sshPublisherDesc(
                        configName: 'harbor-155',
                        transfers: [
                            sshTransfer(
                                cleanRemote: false,
                                excludes: '',
                                execCommand: '',
                                execTimeout: 120000,
                                flatten: false,
                                makeEmptyDirs: false,
                                noDefaultExcludes: false,
                                patternSeparator: '[, ]+',
                                remoteDirectory: '',
                                remoteDirectorySDF: false,
                                removePrefix: 'target',
                                sourceFiles: 'target/*.jar'
                            ),
                            sshTransfer(
                                cleanRemote: false,
                                excludes: '',
                                execCommand: '''docker build -t 192.168.1.155:80/public/springbootdemo:$tag /usr/local/
                                    docker login -u admin -p Harbor12345 192.168.1.155:80
                                    docker push 192.168.1.155:80/public/springbootdemo:$tag''',
                                execTimeout: 120000,
                                flatten: false,
                                makeEmptyDirs: false,
                                noDefaultExcludes: false,
                                patternSeparator: '[, ]+',
                                remoteDirectory: '',
                                remoteDirectorySDF: false,
                                removePrefix: 'docker',
                                sourceFiles: 'docker/*'
                            )
                        ],
                        usePromotionTimestamp: false,
                        useWorkspaceInPromotion: false,
                        verbose: false
                    )
                ])
            }
        }
        stage('Run Container') {
            steps {
                sshPublisher(publishers: [
                    sshPublisherDesc(
                        configName: 'K8S-Master-177',
                        transfers: [
                            sshTransfer(
                                cleanRemote: false,
                                excludes: '',
                                execCommand: '''helm uninstall springbootdemo
                                    kubectl delete deployment springbootdemo-deployment
                                    kubectl delete service springbootdemo-service
                                    helm repo add my-repo http://192.168.1.242:8080/
                                    helm repo update
                                    helm install springbootdemo my-repo/springbootdemo-charts --set replicas=$rep --set tag=$tag''',
                                execTimeout: 120000,
                                flatten: false,
                                makeEmptyDirs: false,
                                noDefaultExcludes: false,
                                patternSeparator: '[, ]+',
                                remoteDirectory: '',
                                remoteDirectorySDF: false,
                                removePrefix: '',
                                sourceFiles: 'deployment.yml'
                            )
                        ],
                        usePromotionTimestamp: false,
                        useWorkspaceInPromotion: false,
                        verbose: false
                    )
                ])
            }
        }
    }
}

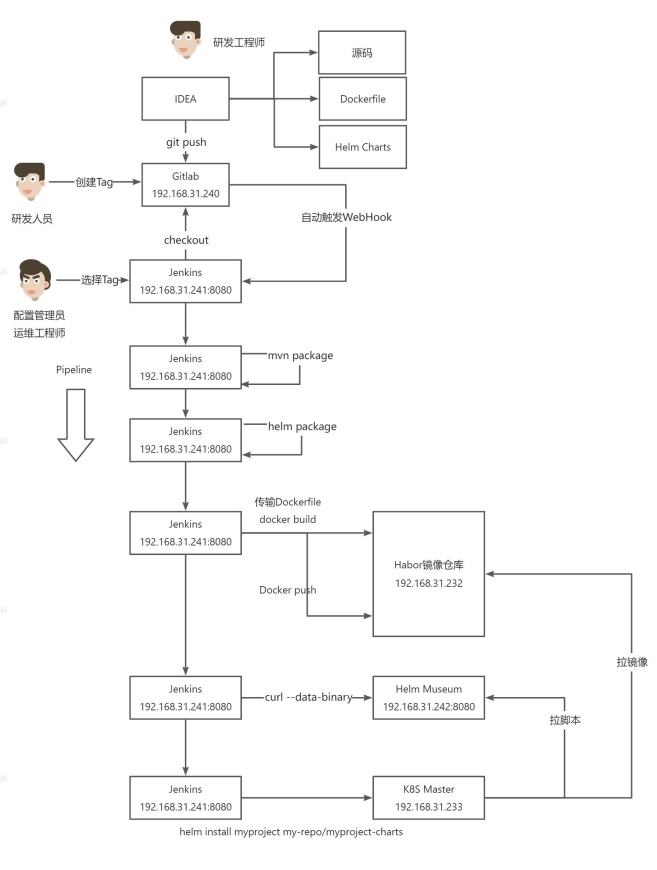

DevOps pipeline summary

Execution flow

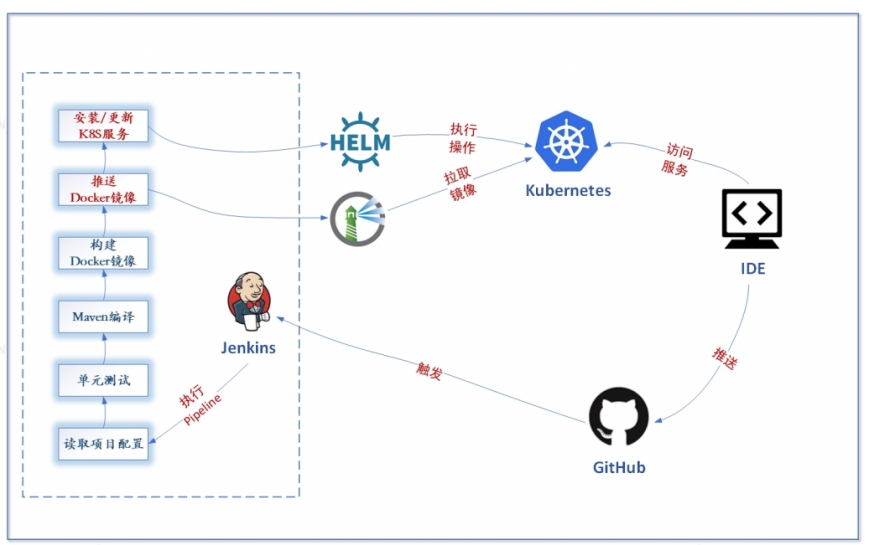

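- The developer pushes code from IDEA to the GitLab repository; the repository contains the source code, the Dockerfile, and the Helm charts.

- After GitLab receives the code, the developer defines a tag. If a WebHook is configured, the Jenkins pipeline is triggered automatically.

- A configuration administrator or operations engineer selects the tag in Jenkins and starts the Jenkins pipeline:

a. Jenkins checks out the code for the specified tag from GitLab.

b. Jenkins runs mvn package locally to build the JAR.

c. Jenkins runs helm package locally to produce the Helm chart .tgz archive.

d. Jenkins sends the JAR and the Dockerfile to the Harbor image server, which first runs docker build to build the image and then docker push to push it to the Harbor registry.

e. Jenkins uploads the locally generated chart archive to the ChartMuseum private repository with curl --data-binary.

f. Jenkins connects remotely to the K8S Master and runs helm install. The K8S Master first fetches the Helm chart and then automatically pulls the image for the tag from Harbor, which completes the automated release.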

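Extension points

- Verifying the correctness of each step

- Deployment result notifications, such as automatic emails or automatic DingTalk messages

- Security checks and RBAC permissions

- Managing and releasing to multiple K8S environments

- Version control for the Jenkins Pipeline itself

- Various other details...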
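For the first extension point, one simple approach is to wrap every command in a helper that logs the step and stops at the first failure. This is only a sketch: the helper name `run_step` and the log format are assumptions, not part of the pipeline above.

```shell
#!/usr/bin/env sh
# Sketch: run one pipeline step, log its outcome, and propagate its exit code.

run_step() {
  local name="$1" code=0
  shift
  echo "[step] $name"
  # Run the remaining arguments as the command; capture a non-zero exit code.
  "$@" || code=$?
  if [ "$code" -ne 0 ]; then
    echo "[fail] $name (exit $code)" >&2
    return "$code"
  fi
  echo "[ok] $name"
}
```

After helm install, a check such as `run_step "wait for rollout" kubectl rollout status deployment/springbootdemo-deployment --timeout=120s` would fail the build if the pods never become ready, assuming the chart creates a Deployment with that name.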